Introduction

This page hosts documentation and specifications for some of the cryptographic algorithms of Mina. For the Rust documentation, see here.

Note that this book is a work in progress, not necessarily reflecting the current state of Mina.

Authors: Izaak Meckler, Vanishree Rao, Matthew Ryan, Anaïs Querol, Joseph Spadavecchia, David Wong, Xiang Xie

In memory of Vitaly Zelov.

Terminology

This document is intended primarily to communicate mathematical ideas to programmers with some experience of math.

To that end, we will often be ambivalent about the difference between sets and types (in whatever programming language, but usually I am imagining some idealized form of Rust or OCaml). So for example we may write

- $A$ is a type

- $A$ is a set

- `A` is a type

and these should all be interpreted the same way, assuming it makes sense in the context. Similarly, we may write any of the following, and potentially mean the same thing:

- $a : A$

- $a \in A$

- `a : A`

We use

- $A \to B$ for function types

- $x \mapsto e$ for defining functions

Also, we usually assume functions are computed by a program (in whatever sense of “program” makes sense in the given context). So if we say “let $f \colon A \to B$”, we typically mean that

- $A$ and $B$ are types in some programming language which makes sense in the context (or maybe that they are sets, again depending on context)

- $f$ is actually a program computing a function of the type $A \to B$ (again, in whatever sense of program makes sense in context)

Groups

Groups are an algebraic structure which

- are used in most widely deployed signature schemes

- are used in most widely deployed zk-SNARKs

- generalize the notion of a collection of symmetries of an object

- generalize certain aspects of numbers

I will not touch on the last two too much.

First let’s do a quick definition and then some examples.

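Definition. A group is a set $G$ with a binary operation $\ast \colon G \times G \to G$, an identity $e \in G$ for that operation, and an inverse map $(-)^{-1} \colon G \to G$ such that

- $\ast$ is associative: $(x \ast y) \ast z = x \ast (y \ast z)$ for all $x, y, z \in G$.

- $e$ is an identity for $\ast$. I.e., $e \ast x = x \ast e = x$ for all $x \in G$.

- $(-)^{-1}$ is an inverse map for $\ast$ with identity $e$. I.e., $x \ast x^{-1} = x^{-1} \ast x = e$ for all $x \in G$.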

So basically, an invertible binary operation. Definition in hand, we can see some examples:

- The integers $\mathbb{Z}$ with $+$, $0$ as identity, and negation as inverse.

- For any natural number $n$, the integers mod $n$. This can be thought of as the set of numbers $\{0, \dots, n - 1\}$ with the operation being $+$ followed by taking the remainder mod $n$. It can also be thought of as the group of rotations of an $n$-gon.

- For any set $X$, the set of invertible functions $X \to X$ (a.k.a. permutations) with function composition as the operation, the identity function as the identity, and function inverse as the inverse operation.

- The set of translations of a plane $\mathbb{R}^2$ with composition of translations (since the composition of translations is a translation). The identity is translating by $(0, 0)$ and inverse is reversing the translation. This is equivalent to the group $\mathbb{R} \times \mathbb{R}$ with coordinate-wise addition as the operation and coordinate-wise negation as the inverse.

- The set of rotations of a sphere with composition as the operation, doing nothing as the identity, and performing the rotation in reverse as the inverse map.

- For any field $F$, the set $F^\times := F \setminus \{0\}$, with field multiplication as the operation, $1$ as the identity, and $x \mapsto x^{-1}$ as the inverse map. This is called the group of units of $F$.

Sometimes, instead of $x \ast y$ we simply write $xy$ when $\ast$ is clear from context.

Abelian groups

An abelian group is a group in which $\ast$ is commutative, meaning $x \ast y = y \ast x$ for all $x, y$. Typically, in that case, we write the group operation as $+$ instead and we write the identity as $0$.

Some examples are

- the integers with addition

- the integers mod $n$ with addition

- the group of units $F^\times$ of a field $F$

- the real numbers with addition

- the rational numbers with addition

- the non-zero real numbers with multiplication

- the non-zero rational numbers with multiplication

- vectors of integers of some fixed dimension with coordinate-wise addition

- the set of polynomials $R[x]$ over a ring $R$, with addition

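Abelian groups are equivalently described as what are called $\mathbb{Z}$-modules. A $\mathbb{Z}$-module is like a vector space, but with the integers instead of a field.

Basically a $\mathbb{Z}$-module (or equivalently an abelian group) is a structure where you can add elements and where it makes sense to multiply a group element by an integer.

If $A$ is an abelian group, we can define this “multiplication by an integer” as follows. If $n \geq 0$, then for $a \in A$, we can define $n \cdot a$ by

$$n \cdot a := \underbrace{a + \dots + a}_{n \text{ times}}$$

and if $n < 0$, then define $n \cdot a := -(|n| \cdot a)$. Or equivalently,

$$n \cdot a := \underbrace{(-a) + \dots + (-a)}_{|n| \text{ times}}$$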

This is the general sense of what is called scalar-multiplication or sometimes exponentiation in cryptography.
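As a rough illustration of multiplication by an integer, here is a minimal Rust sketch. The trait `AbelianGroup`, the type `ZMod` and the function `scalar_mul` are hypothetical names introduced only for this example (they are not arkworks or Mina APIs), and the sketch assumes a small modulus so that sums fit in a `u64`. It uses double-and-add, which computes the same value as the repeated sum in the definition but with about $\log_2 |n|$ doublings.

```rust
/// Hypothetical trait for this sketch: an abelian group written additively.
pub trait AbelianGroup: Copy + PartialEq {
    fn zero() -> Self;
    fn add(self, other: Self) -> Self;
    fn neg(self) -> Self;
}

/// The integers mod N under addition (values are assumed to be reduced, N small).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct ZMod<const N: u64>(pub u64);

impl<const N: u64> AbelianGroup for ZMod<N> {
    fn zero() -> Self {
        ZMod(0)
    }
    fn add(self, other: Self) -> Self {
        ZMod((self.0 + other.0) % N)
    }
    fn neg(self) -> Self {
        ZMod((N - self.0 % N) % N)
    }
}

/// Computes n · a by double-and-add.
pub fn scalar_mul<G: AbelianGroup>(n: i64, a: G) -> G {
    // For negative n we use n · a = |n| · (-a), as in the definition above.
    let (mut k, mut base) = if n < 0 {
        (n.unsigned_abs(), a.neg())
    } else {
        (n as u64, a)
    };
    let mut acc = G::zero();
    while k > 0 {
        if k & 1 == 1 {
            acc = acc.add(base); // this bit of n is set: add the current multiple
        }
        base = base.add(base); // base goes a, 2a, 4a, 8a, ...
        k >>= 1;
    }
    acc
}
```

For instance, in the integers mod 12, `scalar_mul(5, ZMod::<12>(7))` is `ZMod(11)` because $35 \bmod 12 = 11$.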

Cyclic groups

A cyclic group is a special kind of abelian group. It is an abelian group generated by a single element $g \in G$. That is, a cyclic group $G$ (generated by $g \in G$) is one in which for every $h \in G$ we have $h = n \cdot g$ for some $n \in \mathbb{Z}$.

Groups in cryptography

Many cryptographic protocols are defined generically with respect to any abelian group that has a “hard discrete log”.

Let

- $G$ be a cyclic group

- $g$ a generator of $G$

- $\mathcal{D}$ a probability distribution on $G$

- $\mathcal{A}$ a set of algorithms of type $G \to \mathbb{Z}$ with runtime and memory usage bounds. In other words, a set of tuples $(A, t, m)$ where $A$ is a program of type $G \to \mathbb{Z}$, $t$ is a bound on the time that program can be run, and $m$ is a bound on the amount of memory that program can use.

  In practice you fix this to be something like the set of all computations that can be run for less than 1 trillion dollars.

- $\varepsilon \in [0, 1]$ a probability, usually taken to be something close to 0 like $1/2^{128}$

Then we can define the $(G, g, \mathcal{D}, \mathcal{A}, \varepsilon)$-computational discrete-log assumption, which says:

For any $(A, t, m) \in \mathcal{A}$, if you sample $h$ from $G$ according to $\mathcal{D}$, then the probability that $A(h) \cdot g = h$ is smaller than $\varepsilon$, assuming $A$ successfully runs within the given resource bounds.

This is closely related to, but not the same as, the computational Diffie-Hellman assumption. Basically what it’s saying is that for a group element $h$ sampled randomly, you can’t practically compute how many times you have to add $g$ to itself to get $h$.
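To make “how many times you have to add $g$ to itself” concrete, here is a minimal Rust sketch of the obvious brute-force attack. The function `brute_force_dlog` and its parameters are hypothetical names chosen for this illustration. It takes time proportional to the group order, which is exactly what the assumption says is impractical for cryptographically sized groups; it only works here because the example groups are tiny.

```rust
/// Tries k = 0, 1, 2, ... until k · g = h, giving up after `max_steps` attempts.
/// The group is passed in as an identity element and a binary operation `op`.
pub fn brute_force_dlog<G: Copy + PartialEq>(
    g: G,
    h: G,
    identity: G,
    op: impl Fn(G, G) -> G,
    max_steps: u64,
) -> Option<u64> {
    let mut acc = identity; // invariant: acc = k · g
    for k in 0..max_steps {
        if acc == h {
            return Some(k);
        }
        acc = op(acc, g);
    }
    None
}
```

With the integers mod 12 under addition and $g = 5$, the call `brute_force_dlog(5u64, 1u64, 0u64, |a, b| (a + b) % 12, 12)` returns `Some(5)`, since $5 \cdot 5 = 25 \equiv 1$.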

Another really important assumption is the no-relation assumption (TODO: name).

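Basically what this says is that randomly selected group elements have no efficiently computable linear relations between them. Formally, let $G$ be a group, $\mathcal{D}$ a probability distribution on $G$, and $\mathcal{A}$ a set of programs (with resource bounds) that take in a list of group elements as input and output a list of integers of the same length.

Then the $(G, \mathcal{D}, \mathcal{A}, \varepsilon)$-no-relation assumption says for all $(A, t, m) \in \mathcal{A}$, for any $n$, if you sample $h_1, \dots, h_n$ according to $\mathcal{D}$, letting $[e_1, \dots, e_n] = A([h_1, \dots, h_n])$, it is not the case that the $e_i$ are not all zero and

$$e_1 \cdot h_1 + \dots + e_n \cdot h_n = 0$$

except with probability $\varepsilon$ (assuming program $A$ runs in time $t$ with memory $m$).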

Generic group model

Elliptic curves

Now, what are some concrete groups that we can safely make the no-relation or computational discrete log hardness assumptions about?

Well, the most efficiently implementable groups that people have come up with (and that we believe satisfy the above assumptions for $\mathcal{A}$ being the class of realistic computations and $\varepsilon$ being something like $1/2^{128}$) are elliptic curves over finite fields.

Giving a complete definition of what an elliptic curve is requires a lot of math, and is not very useful from the point of view of cryptography. So we will give a definition that is not complete, but more useful.

An elliptic curve $E$ over a field $F$ is a set of the form

$$\{ (x, y) \in F \times F \mid y^2 = x^3 + ax + b \}$$

for some $a, b \in F$, plus an additional point $O$ which is called the point at infinity. Basically it’s a set of pairs $(x, y)$ satisfying some equation of that form. The data of the elliptic curve itself is just the field $F$ together with the constants $a$ and $b$.

What is special about elliptic curves is that there is a way of giving them a group structure with simple to compute operations that we believe satisfy the hardness assumptions described above.

Group negation $N \colon E \to E$ is defined by

$$N(x, y) := (x, -y)$$

so we just negate the $y$ coordinate.

The identity for the group is $O$, the point at infinity. We may therefore also write that point as $0$.

Group addition is more complicated to define, so we will not, but here is what’s worth knowing about how to compute $(x_1, y_1) + (x_2, y_2)$:

- There are three cases

  1. $x_1 \neq x_2$

  2. $x_1 = x_2$ and $y_1 = y_2$

  3. $x_1 = x_2$ but $y_1 \neq y_2$. In this case it turns out $y_1 = -y_2$ and so the two points are inverse, and we return $O$

In cases 1 and 2, the algorithm to compute the result just performs a simple sequence of some field operations (multiplications, additions, divisions, subtractions) with the input values. In other words, there is a simple arithmetic formula for computing the result.
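The three cases can be sketched in Rust as follows. This is a toy illustration under stated assumptions, not Mina's implementation: the curve $y^2 = x^3 + 7$ over $\mathbb{F}_{17}$, the `Point` type and the helper names are all chosen for this example, and the chord and tangent formulas used are the standard textbook ones for short Weierstrass curves in affine form.

```rust
const P: i64 = 17; // toy prime modulus (assumption for this sketch)
const A: i64 = 0; // curve constants for y^2 = x^3 + A x + B (assumption)
const B: i64 = 7;

#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Point {
    Infinity,
    Affine(i64, i64), // coordinates are kept reduced in [0, P)
}

fn modp(x: i64) -> i64 {
    x.rem_euclid(P)
}

/// Field inverse via Fermat's little theorem: x^(P - 2) mod P, for x != 0.
fn inv(x: i64) -> i64 {
    let (mut base, mut e, mut acc) = (modp(x), P - 2, 1);
    while e > 0 {
        if e & 1 == 1 {
            acc = modp(acc * base);
        }
        base = modp(base * base);
        e >>= 1;
    }
    acc
}

pub fn is_on_curve(pt: Point) -> bool {
    match pt {
        Point::Infinity => true,
        Point::Affine(x, y) => modp(y * y) == modp(x * x * x + A * x + B),
    }
}

pub fn neg(pt: Point) -> Point {
    match pt {
        Point::Infinity => Point::Infinity,
        Point::Affine(x, y) => Point::Affine(x, modp(-y)),
    }
}

pub fn add(p: Point, q: Point) -> Point {
    let (x1, y1, x2, y2) = match (p, q) {
        (Point::Infinity, _) => return q, // O is the identity
        (_, Point::Infinity) => return p,
        (Point::Affine(x1, y1), Point::Affine(x2, y2)) => (x1, y1, x2, y2),
    };
    let lambda = if x1 != x2 {
        // Case 1: slope of the chord through the two points.
        modp((y2 - y1) * inv(x2 - x1))
    } else if y1 == y2 && y1 != 0 {
        // Case 2: slope of the tangent at the point (doubling).
        modp((3 * x1 * x1 + A) * inv(2 * y1))
    } else {
        // Case 3: the points are inverse to each other (this also covers y = 0).
        return Point::Infinity;
    };
    let x3 = modp(lambda * lambda - x1 - x2);
    let y3 = modp(lambda * (x1 - x3) - y1);
    Point::Affine(x3, y3)
}
```

On this toy curve, `(1, 5)` and `(2, 7)` are points, and `add(Point::Affine(1, 5), Point::Affine(1, 5))` gives `Point::Affine(2, 10)`. Note that both non-trivial cases need one field division, which is what the projective forms discussed later avoid.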

-

Elliptic curves in Rust

Elliptic curves in Sage

Serializing curve points

Given a curve point $(x, y)$ we know $y^2 = x^3 + ax + b$ and thus $y$ is one of the two square roots of $x^3 + ax + b$. If $y$ is a given square root, the other square root is $-y$ since $(-y)^2 = y^2$. So, if we know $x$, then we almost know the whole curve point: we just need a single bit to tell us which of the two possible values (i.e., the two square roots of $x^3 + ax + b$) is the $y$ coordinate.

Here is how this is commonly done over prime order fields $\mathbb{F}_p$, assuming $p \neq 2$. Remember that we represent elements of $\mathbb{F}_p$ as integers in the range $[0, p - 1]$. In this representation, for a field element $y$, $-y$ is the integer $p - y$ (or $0$ if $y = 0$). Thus, if $y$ is odd, then $-y$ is even (since $p$ is odd and an odd minus an odd is even). Similarly, if $y$ is even and non-zero, then $-y$ is odd (since an odd minus an even is odd).

So, for any $y$, unless $y$ is 0, $y$ and $-y$ have opposite parities. Parity can easily be computed: it’s just the least significant bit. Thus, we have an easy way of encoding a curve point $(x, y)$. Send

- $x$ in its entirety

- The least significant bit $s$ of $y$

Given this, we can reconstruct $(x, y)$ as follows.

- Compute any square root $Y$ of $x^3 + ax + b$

- If the least significant bit of $Y$ is equal to $s$, then $y = Y$, otherwise, $y = -Y$

In the case of Mina, our field elements require 255 bits to store. Thus, we can encode a curve point in $255 + 1 = 256$ bits, or 32 bytes. At the moment this optimized encoding is not implemented for messages on the network. It would be a welcome improvement.
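The encoding and decoding steps above can be sketched as follows. This is a minimal illustration, assuming a toy curve $y^2 = x^3 + x + 1$ over $\mathbb{F}_{23}$; the function names are hypothetical and this is not Mina's wire format. The prime 23 is chosen because $23 \equiv 3 \pmod 4$, in which case a square root of a square $v$ can be computed as $v^{(p+1)/4}$; other primes need a general square-root algorithm such as Tonelli-Shanks.

```rust
const P: u64 = 23; // toy prime with P % 4 == 3 (assumption for this sketch)
const A: u64 = 1; // curve constants for y^2 = x^3 + A x + B (assumption)
const B: u64 = 1;

fn pow_mod(mut base: u64, mut e: u64) -> u64 {
    let mut acc = 1;
    base %= P;
    while e > 0 {
        if e & 1 == 1 {
            acc = acc * base % P;
        }
        base = base * base % P;
        e >>= 1;
    }
    acc
}

/// Square root mod P, valid because P % 4 == 3. Returns None if v is not a square.
fn sqrt_mod(v: u64) -> Option<u64> {
    let r = pow_mod(v, (P + 1) / 4);
    if r * r % P == v % P {
        Some(r)
    } else {
        None
    }
}

/// Keep x in its entirety plus the least significant bit of y.
pub fn compress((x, y): (u64, u64)) -> (u64, bool) {
    (x, y & 1 == 1)
}

/// Recover (x, y), or None if x is not the x-coordinate of a curve point.
pub fn decompress((x, y_is_odd): (u64, bool)) -> Option<(u64, u64)> {
    let x = x % P;
    let rhs = (x * x % P * x + A * x + B) % P;
    let root = sqrt_mod(rhs)?;
    // Pick whichever of root and -root has the requested parity.
    let y = if (root & 1 == 1) == y_is_odd {
        root
    } else {
        (P - root) % P
    };
    // When y = 0 there is only one root, so an "odd" request cannot be met.
    if (y & 1 == 1) == y_is_odd {
        Some((x, y))
    } else {
        None
    }
}
```

For example, `(3, 10)` is on this toy curve, `compress((3, 10))` is `(3, false)`, and `decompress((3, false))` recovers `Some((3, 10))`.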

Projective form / projective coordinates

The above form of representing curve points as pairs $(x, y)$, which is called affine form, is sometimes sub-optimal from an efficiency perspective. There are several other forms in which curve points can be represented, which are called projective forms.

The simple projective form represents a curve $E$ as above as the set

$$\{ (x, y, z) \in F^3 \mid y^2 z = x^3 + a x z^2 + b z^3 \}$$

If you think about it, this is saying that (for $z \neq 0$) $(x/z, y/z)$ is a point on the original curve in affine form. In other words, in projective form we let the first two coordinates get scaled by some arbitrary scaling factor $z$, but we keep track of it as the third coordinate.

To be clear, this means curve points have many different representations. If $(x, y, z)$ is a curve point in projective coordinates, and $s$ is any non-zero element of $F$, then $(sx, sy, sz)$ is another representation of the same curve point.

This means curve points require more space to store, but it makes the group operation much more efficient to compute, as we can avoid having to do any field divisions, which are expensive.

Jacobian form / Jacobian coordinates

There is another form, which is also sometimes called a projective form, which is known as the Jacobian form. There, a curve would be represented as

$$\{ (x, y, z) \in F^3 \mid y^2 = x^3 + a x z^4 + b z^6 \}$$

so the triple $(x, y, z)$ corresponds to the affine point $(x/z^2, y/z^3)$. These are the fastest for computing group addition on a normal computer.
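The correspondence between Jacobian triples and affine points can be sketched as follows. This is a minimal illustration over the toy field $\mathbb{F}_{23}$; the function names are hypothetical and chosen for this example. It also shows that rescaling a triple by a non-zero $s$, as $(s^2 x, s^3 y, s z)$, gives another representation of the same affine point.

```rust
const P: u64 = 23; // toy prime modulus (assumption for this sketch)

fn pow_mod(mut base: u64, mut e: u64) -> u64 {
    let mut acc = 1;
    base %= P;
    while e > 0 {
        if e & 1 == 1 {
            acc = acc * base % P;
        }
        base = base * base % P;
        e >>= 1;
    }
    acc
}

/// Maps a Jacobian triple (x, y, z) to the affine point (x / z^2, y / z^3).
/// Returns None when z = 0, which we take to represent the point at infinity.
pub fn jacobian_to_affine((x, y, z): (u64, u64, u64)) -> Option<(u64, u64)> {
    if z % P == 0 {
        return None;
    }
    let z_inv = pow_mod(z, P - 2); // the single field inversion
    let z_inv2 = z_inv * z_inv % P;
    let z_inv3 = z_inv2 * z_inv % P;
    Some((x * z_inv2 % P, y * z_inv3 % P))
}

/// Rescales a Jacobian triple by a non-zero s: (s^2 x, s^3 y, s z).
pub fn rescale((x, y, z): (u64, u64, u64), s: u64) -> (u64, u64, u64) {
    let s2 = s * s % P;
    let s3 = s2 * s % P;
    (x * s2 % P, y * s3 % P, z * s % P)
}
```

For instance, `rescale((3, 10, 1), 2)` is `(12, 11, 2)`, and both triples map back to the affine point `(3, 10)`. The inversion is only paid once, at conversion time, rather than in every group addition.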

Takeaways

- Use affine coordinates when the cost of division doesn’t matter and saving space is important. Specific contexts to keep in mind:

  - Working with elliptic curve points inside a SNARK circuit

- Use Jacobian coordinates for computations on normal computers, or other circumstances where space usage is not that costly and division is expensive.

- Check out this website for the formulas for implementing the group operation.

More things to know

- When cryptographers talk about “groups”, they usually mean a “computational group”, which is a group equipped with efficient algorithms for computing the group operation and inversion. This is different because a group in the mathematical sense need not have its operations be computable at all.

Exercises

- Implement a type `JacobianPoint<F: Field>` and functions for computing the group operation

- Familiarize yourself with the types and traits in `ark_ec`. Specifically,

  - todo

- Implement `fn decompress<F: SquareRootField>(c: (F, bool)) -> (F, F)`

Rings

A ring is like a field, but where elements may not be invertible. Basically, it’s a structure where we can

- add

- multiply

- subtract

but not necessarily divide. If you know what polynomials are already, you can think of it as the minimal necessary structure for polynomials to make sense. That is, if $R$ is a ring, then we can define the set of polynomials $R[x]$ (basically arithmetic expressions in the variable $x$) and think of any polynomial $f \in R[x]$ as giving rise to a function $R \to R$ defined by substituting in $a$ for $x$ in $f$ and computing using $+$ and $\cdot$ as defined in $R$.

So, in full, a ring $R$ is a set equipped with

- $(+) \colon R \times R \to R$

- $(\cdot) \colon R \times R \to R$

- $(-) \colon R \to R$

- $0 \in R$

- $1 \in R$

such that

- $(+, 0, -)$ gives $R$ the structure of an abelian group

- $(\cdot)$ is associative and commutative with identity $1$

- $\cdot$ distributes over $+$. I.e., $x \cdot (y + z) = x \cdot y + x \cdot z$ for all $x, y, z \in R$.

Intro

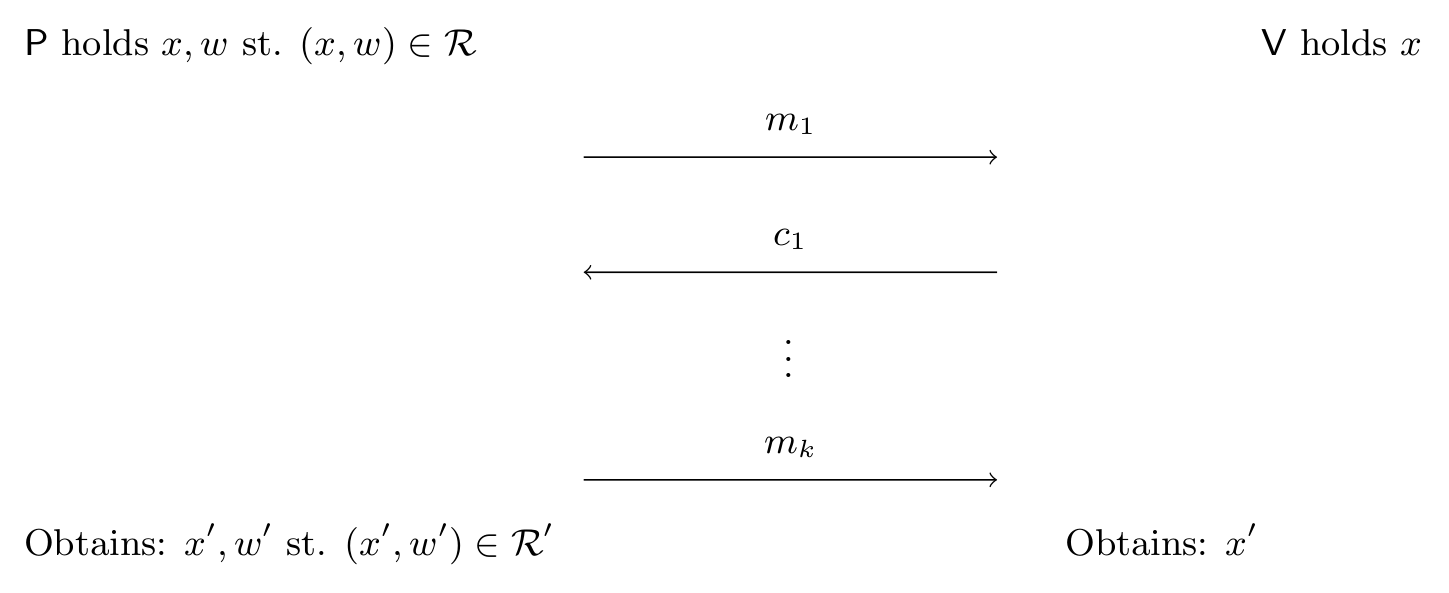

The purpose of this document is to give the reader mathematical, cryptographic, and programming context sufficient to become an effective practitioner of zero-knowledge proofs and ZK-SNARKs specifically.

Some fundamental mathematical objects

In this section we’ll discuss the fundamental objects used in the construction of most ZK-SNARKs in use today. These objects are used extensively and without ceremony, so it’s important to understand them very well.

If you find you don’t understand something at first, don’t worry: practice is often the best teacher and in using these objects you will find they will become like the bicycle you’ve had for years: like an extension of yourself that you use without a thought.

Fields

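A field is a generalization of the concept of a number. To be precise, a field is a set $F$ equipped with

- An element $0 \in F$

- An element $1 \in F$

- A function $\mathsf{add} \colon F \times F \to F$

- A function $\mathsf{mul} \colon F \times F \to F$

- A function $\mathsf{sub} \colon F \times F \to F$

- A function $\mathsf{div} \colon F \times (F \setminus \{0\}) \to F$

Note that the second argument to $\mathsf{div}$ cannot be $0$. We write these functions in the traditional infix notation, writing

- $xy$ or $x \cdot y$ for $\mathsf{mul}(x, y)$

- $x + y$ for $\mathsf{add}(x, y)$

- $x - y$ for $\mathsf{sub}(x, y)$

- $\frac{x}{y}$ for $\mathsf{div}(x, y)$

and we also write $-x$ for $\mathsf{sub}(0, x)$ and $x^{-1}$ for $\mathsf{div}(1, x)$.

Moreover all these elements and functions must obey all the usual laws of arithmetic, such as

- $x + (y + z) = (x + y) + z$

- $x + y = y + x$

- $x + (-x) = 0$

- $x + 0 = x$

- $x \cdot (y \cdot z) = (x \cdot y) \cdot z$

- $x \cdot y = y \cdot x$

- $\frac{x}{y} = z$ if and only if $x = z \cdot y$, assuming $y \neq 0$.

- $x \cdot 1 = x$

- $x \cdot (y + z) = x \cdot y + x \cdot z$

In short, $F$ should be an abelian group over $+$ with $0$ as identity and $-$ as inverse, $F \setminus \{0\}$ should be an abelian group over $\cdot$ with $1$ as identity and $(-)^{-1}$ as inverse, and multiplication should distribute over addition. If you don’t know what an abelian group is, don’t worry, we will cover it later.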

The point of defining a field is that we can algebraically manipulate elements of a field the same way we do with ordinary numbers, adding, multiplying, subtracting, and dividing them without worrying about rounding, underflows, overflows, etc.

In Rust, we use the trait `Field` to represent types that are fields. So, if we have `T : Field` then values of type `T` can be multiplied, subtracted, divided, etc.

Examples

The most familiar examples of fields are the real numbers $\mathbb{R}$ and the rational numbers $\mathbb{Q}$ (ratios of integers). Some readers may also be friends with the complex numbers $\mathbb{C}$, which are also a field.

The fields that we use in ZKPs, however, are different. They are finite fields. A finite field is a field with finitely many elements. This is in distinction to the fields $\mathbb{Q}$, $\mathbb{R}$, and $\mathbb{C}$, which all have infinitely many elements.

What are finite fields like?

In this section we’ll try to figure out from first principles what a finite field should look like. If you just want to know the answer, feel free to skip to the next section.

Let’s explore what a finite field can possibly be like. Say $F$ is a finite field. If $n$ is a natural number like $3$ or $5$ we can imagine it as an element of $F$ by writing $\underbrace{1 + \dots + 1}_{n}$.

Since $F$ is finite it must be the case that we can find two distinct natural numbers $n < m$ which are the same when interpreted as elements of $F$.

Then, $m - n = 0$ in $F$, which means the element $\underbrace{1 + \dots + 1}_{m - n}$ is $0$. Now that we have established that if you add $1$ to itself enough times you get $0$, let $p$ be the least positive natural number such that if you add $1$ to itself $p$ times you get $0$.

Now let’s show that $p$ is prime. Suppose it’s not, and $p = ab$ with $1 < a, b < p$. Then since $p = 0$ in $F$, $ab = 0$. It is a fact about fields (exercise) that if $ab = 0$ then either $a$ is 0 or $b$ is 0. Either way, we would then get a positive natural number smaller than $p$ which is equal to $0$ in $F$, which is not possible since $p$ is the smallest such number. So we have demonstrated that $p$ is prime.

The smallest number of times you have to add $1$ to itself to get 0 is called the characteristic of the field $F$. So, we have established that every finite field has a characteristic and it is prime.

This gives us a big hint as to what finite fields should look like.

Prime order fields

With the above in hand, we are ready to define the simplest finite fields, which are fields of prime order (also called prime order fields).

Let $p$ be a prime. Then $\mathbb{F}_p$ (pronounced “eff pee” or “the field of order p”) is defined as the field whose elements are the set of natural numbers $\{0, 1, \dots, p - 1\}$.

- $0$ is defined to be $0$

- $1$ is defined to be $1$

- $\mathsf{add}(x, y) = (x + y) \bmod p$

- $\mathsf{sub}(x, y) = (x - y) \bmod p$

- $\mathsf{mul}(x, y) = (x \cdot y) \bmod p$

- $\mathsf{div}(x, y) = (x \cdot y^{p - 2}) \bmod p$

Basically, you just do arithmetic operations normally, except you take the remainder after dividing by $p$. This is with the exception of division, which has a funny definition. Actually, a more efficient algorithm is used in practice to calculate division, but the above is the most succinct way of writing it down.

If you want, you can try to prove that the above definition of division makes the required equations hold, but we will not do that here.

Let’s work out a few examples.

2 is a prime, so there is a field $\mathbb{F}_2$ whose elements are $\{0, 1\}$. The only surprising equation we have is

$$1 + 1 = 0$$

Addition is XOR and multiplication is AND.

Let’s do a more interesting example. 5 is a prime, so there is a field $\mathbb{F}_5$ whose elements are $\{0, 1, 2, 3, 4\}$. We have

$$\frac{1}{2} = 1 \cdot 2^{5 - 2} = 2^3 = 8 = 3$$

where the last equality follows because everything is mod 5.

We can confirm that 3 is in fact $\frac{1}{2}$ by multiplying 3 and 2 and checking the result is 1.

$$3 \cdot 2 = 6 = 1$$

so that checks out.
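The definitions above translate almost directly into code. Here is a minimal Rust sketch, assuming a prime modulus small enough that products fit in a `u64`; the type `Fp` is a hypothetical name for this example and is unrelated to the `Field` trait mentioned earlier. Division uses the succinct $y^{p-2}$ definition rather than the faster algorithms used in practice.

```rust
/// An element of F_P for a prime P (assumed here to be smaller than 2^32).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Fp<const P: u64>(pub u64);

impl<const P: u64> Fp<P> {
    pub fn new(x: u64) -> Self {
        Fp(x % P)
    }
    pub fn add(self, o: Self) -> Self {
        Fp((self.0 + o.0) % P)
    }
    pub fn sub(self, o: Self) -> Self {
        // Add P first so the intermediate value never goes negative.
        Fp((self.0 + P - o.0) % P)
    }
    pub fn mul(self, o: Self) -> Self {
        Fp(self.0 * o.0 % P)
    }
    /// Square-and-multiply exponentiation.
    pub fn pow(self, mut e: u64) -> Self {
        let mut base = self;
        let mut acc = Self(1 % P);
        while e > 0 {
            if e & 1 == 1 {
                acc = acc.mul(base);
            }
            base = base.mul(base);
            e >>= 1;
        }
        acc
    }
    /// div(x, y) = x * y^(P - 2); the second argument cannot be 0.
    pub fn div(self, o: Self) -> Option<Self> {
        if o.0 == 0 {
            None
        } else {
            Some(self.mul(o.pow(P - 2)))
        }
    }
}
```

With `type F5 = Fp<5>`, the worked example reads `F5::new(1).div(F5::new(2)) == Some(F5::new(3))`.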

In cryptography, we typically work with much larger finite fields. There are two ways to get a large finite field.

- Pick a large prime $p$ and take the field $\mathbb{F}_p$. This is what we do in Mina, where we use fields in which $p$ is on the order of $2^{255}$.

- Take an extension of a small finite field. We may expand this document to talk about field extensions later, but it does not do so yet.

Algorithmics of prime order fields

For a finite field $\mathbb{F}_p$ where $p$ fits in $n$ bits (i.e., $p < 2^n$) we have

- Addition, subtraction: $O(n)$

- Multiplication: $O(n^2)$

- Division: $O(n^2)$ I believe, in practice significantly slower than multiplication.

Actually, on a practical level, it’s more accurate to model the complexity in terms of the number of limbs rather than the number of bits, where a limb is 64 bits. Asymptotically it makes no difference but concretely it’s better to think about the number of limbs for the most part.

As a result you can see that the smaller $n$ is, the better, especially with respect to multiplication, which dominates performance considerations for implementations of zk-SNARKs, since they are dominated by elliptic curve operations that consist of field operations.

While still in development, Mina used to use a field of 753 bits or 12 limbs and now uses a field of 255 bits or 4 limbs. As a result, field multiplication automatically became faster by a factor of roughly $(12/4)^2 = 9$, so you can see it’s very useful to try to shrink the field size.

Polynomials

The next object to know about is polynomials. A polynomial is a syntactic object that also defines a function.

Specifically, let $R$ be a field (or more generally it could even be a ring, which is like a field but without necessarily having division, like the integers $\mathbb{Z}$). And pick a variable name like $x$. Then $R[x]$ (pronounced “R adjoin x” or “polynomials in x over R” or “polynomials in x with R coefficients”) is the set of syntactic expressions of the form

$$a_0 + a_1 x + a_2 x^2 + \dots + a_d x^d$$

where each $a_i \in R$ and $d$ is any natural number. If we wanted to be very formal about it, we could define $R[x]$ to be the set of lists of elements of $R$ (the lists of coefficients), and the above is just a suggestive way of writing that list.

An important fact about polynomials over $R$ is that they can be interpreted as functions $R \to R$. In other words, there is a function $\mathsf{eval} \colon R[x] \to (R \to R)$ defined by

$$\mathsf{eval}(a_0 + a_1 x + \dots + a_d x^d) = (y \colon R) \mapsto a_0 + a_1 y + \dots + a_d y^d$$

where $x$ is just a variable name without a value assigned to it, and $\mathsf{eval}$ maps it to a field element $y$ and evaluates the function as the inner product between the list of coefficients and the powers of $y$.

It is important to remember that polynomials are different from the functions that they compute. Polynomials are really just lists of coefficients. Over some fields, there are polynomials $p(x)$ and $q(x)$ such that $\mathsf{eval}(p(x)) = \mathsf{eval}(q(x))$, but $p(x) \neq q(x)$.

For example, consider in $\mathbb{F}_2[x]$ the polynomials $x$ and $x^2$. These map to the same function $\mathbb{F}_2 \to \mathbb{F}_2$ (meaning for $x \in \{0, 1\}$ it holds that $x = x^2$), but are distinct polynomials.
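The distinction between a polynomial and its function can be checked directly. In this minimal Rust sketch a polynomial over $\mathbb{F}_p$ is just a slice of coefficients, lowest degree first; the function name `eval` mirrors the map in the text but the signature is an assumption made for this example. Evaluation uses Horner's rule, which computes the same inner product with one multiplication per coefficient.

```rust
/// Evaluates coeffs[0] + coeffs[1] * y + coeffs[2] * y^2 + ... modulo a small prime p.
pub fn eval(coeffs: &[u64], y: u64, p: u64) -> u64 {
    // Horner's rule: start from the highest coefficient and fold downwards.
    coeffs.iter().rev().fold(0, |acc, &c| (acc * y + c) % p)
}
```

Over $\mathbb{F}_2$, the coefficient lists `[0, 1]` (for $x$) and `[0, 0, 1]` (for $x^2$) are different lists, yet `eval` returns the same value for both at every point of the field.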

Some definitions and notation

If $f$ is a polynomial in $R[x]$ and $a \in R$, we write $f(a)$ for $\mathsf{eval}(f)(a)$.

If $f$ is a polynomial, the degree of $f$ is the largest $d$ for which the coefficient of $x^d$ is non-zero in $f$. For example, the degree of $x^3 + 2x^{10}$ is 10.

We will use the notation $R[x]_{< d}$ and $R[x]_{\leq d}$ for the set of polynomials of degree less-than and less-than-or-equal $d$ respectively.

Polynomials can be added (by adding the coefficients) and multiplied (by carrying out the multiplication formally and collecting like terms to reduce to a list of coefficients). Thus $R[x]$ is a ring.

Fundamental theorem of polynomials

An important fact for zk-SNARKs about polynomials is that we can use them to encode arrays of field elements. In other words, there is a way of converting between polynomials of degree at most $d$ and arrays of field elements of length $d + 1$.

This is important for a few reasons

- It will allow us to translate statements about arrays of field elements into statements about polynomials, which will let us construct zk-SNARKs.

- It will allow us to perform certain operations on polynomials more efficiently.

So let’s get to defining and proving this connection between arrays of field elements and polynomials.

The first thing to understand is that we won’t be talking about arrays directly in this section. Instead, we’ll be talking about functions $A \to F$ where $A$ is a finite subset of $F$. The idea is, if $A$ has size $n$, and we put an ordering on $A$, then we can think of a function $A \to F$ as the same thing as an immutable array of length $n$, since such a function can be thought of as returning the value of an array at the input position.

With this understanding in hand, we can start describing the “fundamental theorem of polynomials”. If $A \subseteq F$ has size $n$, this theorem will define an isomorphism between functions $A \to F$ and $F[x]_{< n}$, the set of polynomials of degree at most $n - 1$.

Now let’s start defining this isomorphism.

One direction of it is very easy to define. It is none other than the evaluation map, restricted to $A$:

$$\mathsf{eval}_A \colon F[x]_{< n} \to (A \to F)$$

$$\mathsf{eval}_A(f) = a \mapsto f(a)$$

We would now like to construct an inverse to this map. What would that mean? It would be a function that takes as input a function $\varphi \colon A \to F$ (remember, basically an array of length $n$), and returns a polynomial $f$ which agrees with $\varphi$ on the set $A$. In other words, we want to construct a polynomial that interpolates between the points $(a, \varphi(a))$ for $a \in A$.

Our strategy for constructing this polynomial will be straightforward. For each $a \in A$, we will construct a polynomial $f_a$ that is equal to $\varphi(a)$ when evaluated at $a$, and equal to $0$ when evaluated anywhere else in the set $A$.

Then, our final polynomial will be $f := \sum_{a \in A} f_a$. Then, when $f$ is evaluated at $a_0 \in A$, only the $f_{a_0}$ term will be non-zero (and equal to $\varphi(a_0)$ as desired); all the other terms will disappear.

Constructing the interpolation map requires a lemma.

Lemma. (construction of vanishing polynomials)

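Let $S \subseteq F$ be finite. Then there is a polynomial $v_S \in F[x]$ of degree $|S|$ such that $v_S$ evaluates to $0$ on $S$, and is non-zero off of $S$. $v_S$ is called the vanishing polynomial of $S$.

Proof. Let $v_S = \prod_{s \in S} (x - s)$. Clearly $v_S$ has degree $|S|$. If $t \in S$, then $v_S(t) = 0$ since $x - t$ is one of the terms in the product defining $v_S$, so when we evaluate at $t$, $t - t = 0$ is one of the terms. If $t \notin S$, then all the terms in the product are non-zero, and thus $v_S(t)$ is non-zero as well.

Now we can define the inverse map. Define

$$\mathsf{interp}_A \colon (A \to F) \to F[x]_{< n}$$

$$\mathsf{interp}_A(\varphi) = \sum_{a \in A} \frac{\varphi(a)}{v_{A \setminus \{a\}}(a)} \cdot v_{A \setminus \{a\}}$$

Since each $v_{A \setminus \{a\}}$ has degree $n - 1$, this polynomial has degree at most $n - 1$. Now we have, for any $b \in A$,

$$\mathsf{eval}_A(\mathsf{interp}_A(\varphi))(b) = \sum_{a \in A} \frac{\varphi(a)}{v_{A \setminus \{a\}}(a)} \cdot v_{A \setminus \{a\}}(b) = \frac{\varphi(b)}{v_{A \setminus \{b\}}(b)} \cdot v_{A \setminus \{b\}}(b) + \sum_{a \neq b} \frac{\varphi(a)}{v_{A \setminus \{a\}}(a)} \cdot 0 = \varphi(b)$$

Thus $\mathsf{eval}_A \circ \mathsf{interp}_A$ is the identity.

So we have successfully devised a way of interpolating a set of $n$ points with a polynomial of degree at most $n - 1$.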
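The interpolation map can be sketched in Rust as follows, directly following the formula with vanishing polynomials. This is a minimal illustration over the toy field $\mathbb{F}_{17}$ with hypothetical function names; it assumes the $x$-coordinates are distinct and reduced mod 17, and it takes quadratic time, unlike the faster method for special sets $A$ discussed later.

```rust
const P: u64 = 17; // toy prime modulus (assumption for this sketch)

fn pow_mod(mut base: u64, mut e: u64) -> u64 {
    let mut acc = 1;
    base %= P;
    while e > 0 {
        if e & 1 == 1 {
            acc = acc * base % P;
        }
        base = base * base % P;
        e >>= 1;
    }
    acc
}

/// Evaluates a polynomial given by its coefficients (lowest degree first).
pub fn eval(f: &[u64], y: u64) -> u64 {
    f.iter().rev().fold(0, |acc, &c| (acc * y + c) % P)
}

/// Coefficients of the vanishing polynomial v_S = prod over s in S of (x - s).
pub fn vanishing(s: &[u64]) -> Vec<u64> {
    let mut v = vec![1];
    for &root in s {
        // Multiply v by (x - root).
        let mut next = vec![0; v.len() + 1];
        for (i, &c) in v.iter().enumerate() {
            next[i + 1] = (next[i + 1] + c) % P; // the c * x part
            next[i] = (next[i] + c * (P - root % P)) % P; // the c * (-root) part
        }
        v = next;
    }
    v
}

/// interp_A(phi): sum over a in A of phi(a) / v_{A \ {a}}(a) * v_{A \ {a}}.
/// `points` lists the pairs (a, phi(a)); the a's must be distinct.
pub fn interpolate(points: &[(u64, u64)]) -> Vec<u64> {
    let mut f = vec![0; points.len()];
    for (i, &(a, phi_a)) in points.iter().enumerate() {
        let others: Vec<u64> = points
            .iter()
            .enumerate()
            .filter(|&(j, _)| j != i)
            .map(|(_, &(b, _))| b)
            .collect();
        let v = vanishing(&others);
        // Divide by v(a) by multiplying with its inverse v(a)^(P - 2).
        let scale = phi_a % P * pow_mod(eval(&v, a), P - 2) % P;
        for (k, &c) in v.iter().enumerate() {
            f[k] = (f[k] + scale * c) % P;
        }
    }
    f
}
```

For example, the values of $2 + x^2$ at $0, 1, 2$ are $2, 3, 6$, and `interpolate(&[(0, 2), (1, 3), (2, 6)])` returns the coefficient list `[2, 0, 1]`.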

What remains to be seen is that these two maps are inverse in the other direction. That is, that for any polynomial $f \in F[x]_{< n}$, we have

$$\mathsf{interp}_A(\mathsf{eval}_A(f)) = f$$

This says that if we interpolate the function that has the values of $f$ on $A$, we get back $f$ itself. This essentially says that there is only one way to interpolate a set of $n$ points with a polynomial of degree at most $n - 1$. So let’s prove it by proving that statement.

Lemma: polynomials that agree on enough points are equal.

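Let $F$ be a field and suppose $f, g \in F[x]$ have degree at most $n$. Let $A \subseteq F$ have size $n + 1$.

Suppose for all $a \in A$, $f(a) = g(a)$. Then $f = g$ as polynomials.

Proof. Define $h := f - g$. Our strategy will be to show that $h$ is the zero polynomial. This would then imply that $f = g$ as polynomials. Note that $h$ vanishes on all of $A$ since $f$ and $g$ are equal on $A$.

To that end, let $a_0 \in A$. Then we can apply the polynomial division algorithm to divide $h$ by $x - a_0$ and obtain polynomials $q_0, r_0$ such that $h = q_0 (x - a_0) + r_0$ and $r_0$ has degree less than 1. I.e., $r_0$ is a constant in $F$.

Now, $0 = h(a_0) = q_0(a_0) \cdot 0 + r_0 = r_0$, so $r_0 = 0$ and thus $h = q_0 (x - a_0)$.

Note that $q_0$ is 0 on all elements $a \in A$ with $a \neq a_0$ since $h(a) = q_0(a)(a - a_0) = 0$, but $a - a_0 \neq 0$.

Thus, if we iterate the above, enumerating the elements of $A$ as $a_0, \dots, a_n$, we find that

$$h = (x - a_0)(x - a_1) \cdots (x - a_n) q_n$$

Now, if $q_n$ is not the zero polynomial, then $h$ will have degree at least $n + 1$ since it would have as a factor the $n + 1$ linear terms $(x - a_i)$. But since $f, g$ both have degree at most $n$ and $h = f - g$, $h$ has degree at most $n$ as well. Thus, $q_n = 0$, which means $h = 0$ as well.

This gives us the desired conclusion, that $f = g$ as polynomials.

Now we can easily show that interpolating the evaluations of a polynomial yields that polynomial itself. Let $f \in F[x]_{< n}$. Then $\mathsf{interp}_A(\mathsf{eval}_A(f))$ is a polynomial of degree at most $n - 1$ that agrees with $f$ on $A$, a set of size $n$. Thus, by the lemma, they are equal as polynomials. So indeed $\mathsf{interp}_A(\mathsf{eval}_A(f)) = f$ for all $f \in F[x]_{< n}$.

So far we have proven that $\mathsf{eval}_A$ and $\mathsf{interp}_A$ give an isomorphism of sets (i.e., a bijection) between the sets $A \to F$ and $F[x]_{< n}$.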

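But we can take this theorem a bit further. The set of functions $A \to F$ can be given the structure of a ring, where addition and multiplication happen pointwise. I.e., for $\varphi, \psi \colon A \to F$ we define $\varphi + \psi := a \mapsto \varphi(a) + \psi(a)$ and $\varphi \cdot \psi := a \mapsto \varphi(a) \cdot \psi(a)$. Then we can strengthen our theorem to say

Fundamental theorem of polynomials (final version)

Let $n \in \mathbb{N}$ and let $A \subseteq F$ with $|A| = n$. With

$$\mathsf{eval}_A \colon F[x]_{< n} \to (A \to F)$$

$$\mathsf{interp}_A \colon (A \to F) \to F[x]_{< n}$$

defined as above, these two functions define an isomorphism of rings (where products in $F[x]_{< n}$ are reduced modulo the vanishing polynomial $v_A$ so that they stay of degree less than $n$).

That is, they are mutually inverse and each one respects addition, subtraction and multiplication.

The fundamental theorem of polynomials is very important when it comes to computing operations on polynomials. As we will see in the next section, the theorem will help us to compute the product of degree $n$ polynomials in time $O(n \log n)$, whereas the naive algorithm takes time $O(n^2)$. To put this in perspective, if $n = 2^{16}$, $n^2$ is $4096$ times larger than $n \log n$ and the gap only gets bigger as $n$ grows.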

Schwartz–Zippel lemma

Computer representation

There are three common representations for polynomials used in computer implementations.

- Dense coefficient form. A degree $d$ polynomial is represented as a vector of length $d + 1$ of all the coefficients. Entry $i$ of the vector corresponds to the coefficient $a_i$. This corresponds to the `DensePolynomial` type in arkworks. Sometimes this is also described as writing the polynomial “in the monomial basis”, because it amounts to writing the polynomial as a linear combination of the monomials $x^i$.

- Sparse coefficient form. If a polynomial does not have very many non-zero coefficients, the above representation is wasteful. In sparse coefficient form, you represent a polynomial as a vector (or potentially a hash-map) of pairs `(usize, F)` where `F` is the type of coefficients. The polynomial corresponding to the list `[(i_0, b_0), ..., (i_n, b_n)]` is $b_0 x^{i_0} + \dots + b_n x^{i_n}$.

- Evaluation form. We fix an index set $A \subseteq F$, with $A = \{a_0, \dots, a_d\}$, and represent a polynomial $f \in F[x]_{\leq d}$ as the vector `[f(a_0), ..., f(a_d)]`. By the fundamental theorem of polynomials, this is a valid way of representing the polynomials, since the coefficients form can be obtained by using the $\mathsf{interp}_A$ function.

The evaluation form is very important. The reason is that multiplying two polynomials in the evaluation form takes time $O(n)$. You just multiply the two vectors entry-wise. By contrast, the coefficient forms naively require time $O(n^2)$ to multiply.
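The three representations can be compared in a few lines of Rust. This is a minimal sketch with hypothetical function names, using plain `u64` values modulo a small prime `p` instead of the arkworks types; the conversion to evaluation form here is the naive one.

```rust
/// Converts sparse pairs (exponent, coefficient) into dense coefficients mod p.
pub fn sparse_to_dense(sparse: &[(usize, u64)], p: u64) -> Vec<u64> {
    let len = sparse.iter().map(|&(i, _)| i + 1).max().unwrap_or(0);
    let mut dense = vec![0; len];
    for &(i, b) in sparse {
        dense[i] = (dense[i] + b) % p;
    }
    dense
}

/// Evaluation form of a dense polynomial over the index set `points`.
pub fn to_evaluations(dense: &[u64], points: &[u64], p: u64) -> Vec<u64> {
    points
        .iter()
        .map(|&a| dense.iter().rev().fold(0, |acc, &c| (acc * a + c) % p))
        .collect()
}

/// Multiplication in evaluation form: just multiply entry-wise.
pub fn mul_evaluations(f: &[u64], g: &[u64], p: u64) -> Vec<u64> {
    f.iter().zip(g).map(|(&x, &y)| x * y % p).collect()
}
```

For example, modulo 17 the sparse list `[(0, 1), (3, 2)]` (that is, $1 + 2x^3$) becomes the dense vector `[1, 0, 0, 2]`, whose evaluations on the points `[0, 1, 2]` are `[1, 3, 0]`. One caveat when multiplying this way: the product has a higher degree than its factors, so the index set needs enough points to determine it.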

Now, there is a trick. For certain sets $A \subseteq F$, we can efficiently translate between the dense coefficients form and the evaluation form. That is, for certain $A$, the functions $\mathsf{interp}_A$ and $\mathsf{eval}_A$ can be computed more efficiently than $O(n^2)$.

Multiplying polynomials

The algorithm that allows us to multiply polynomials in $O(n \log n)$ is called the Cooley-Tukey fast Fourier transform, or FFT for short. It was discovered by Gauss 160 years earlier, but then separately rediscovered and publicized by Cooley and Tukey.

The heart of Cooley-Tukey FFT is actually about converting between coefficient and evaluation representations, rather than the multiplication itself. Given polynomials $p$ and $q$ in dense coefficient representation, it works like this.

- Convert $p$ and $q$ from coefficient to evaluation form in $O(n \log n)$ using Cooley-Tukey FFT

- Compute $r = p \cdot q$ in evaluation form by multiplying their points pairwise in $O(n)$

- Convert $r$ back to coefficient form in $O(n \log n)$ using the inverse Cooley-Tukey FFT

The key observation is that we can choose any $n$ distinct evaluation points to represent any degree $n - 1$ polynomial. The Cooley-Tukey FFT works by selecting points that yield an efficient FFT algorithm. These points are fixed and work for any polynomials of a given degree.

The next section describes the Cooley-Tukey FFT in detail.

Fast Fourier Transform (FFT)

This section describes how the Cooley-Tukey fast Fourier transform works. As we learned in the previous section, the key is to select evaluation points that yield an efficient FFT algorithm.

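Specifically, say we have $\omega \in F$ such that $\omega^n = 1$, and $\omega^r \neq 1$ for any $0 < r < n$.

Put another way, all the values $1, \omega, \omega^2, \omega^3, \dots, \omega^{n-1}$ are distinct and $\omega^n = 1$.

Put yet another way, the group generated by $\omega$ inside $F^\times$ (written $\langle \omega \rangle$) has size $n$.

We call such an $\omega$ a primitive $n$-th root of unity.

Suppose we have an $\omega$ which is a primitive $2^k$th root of unity and let $A_k = \{1, \omega, \dots, \omega^{2^k - 1}\}$.

The FFT algorithm will let us compute $\mathsf{interp}_{A_k}$ for this set.

Actually, it is easier to see how it will let us compute $\mathsf{eval}_{A_k}$ efficiently.

We will describe an algorithm $\mathsf{FFT}(k, \omega, f)$ that takes as input

- $\omega \in F$, a primitive $2^k$th root of unity

- $f \in F[x]_{< 2^k}$ in dense coefficients form (i.e., as a vector of coefficients of length $n = 2^k$).

and outputs the vector of evaluations $[f(1), f(\omega), f(\omega^2), \dots, f(\omega^{2^k - 1})]$ and does it in time $O(k 2^k)$ (which is to say, $O(n \log n)$ if $n = 2^k$).

Notice that naively, computing each evaluation $f(\omega^i)$ using the coefficients of $f$ would require time $O(n)$, and so computing all $n$ of them would require time $O(n^2)$.

The algorithm $\mathsf{FFT}(k, \omega, f)$ can be defined recursively as follows.

If $k = 0$, then $\omega$ is a primitive $1$st root of unity, and $f$ is a polynomial of degree $0$. That means $\omega = 1$ and also $f$ is a constant $c \in F$. So, we can immediately output the array of evaluations $[c] = [f(1)]$.

If $k > 0$, then we will split $f$ into two polynomials, recursively call $\mathsf{FFT}$ on them, and reconstruct the result from the recursive calls.

To that end, define $f_0$ to be the polynomial whose coefficients are all the even-index coefficients of $f$ and $f_1$ the polynomial whose coefficients are all the odd-index coefficients of $f$. In terms of the array representation, this just means splitting out every other entry into two arrays. So that can be done in time $O(n)$.

Write $f = \sum_{i < 2^k} c_i x^i$, so that $f_0 = \sum_{i < 2^{k-1}} c_{2i} x^i$ and $f_1 = \sum_{i < 2^{k-1}} c_{2i+1} x^i$. Then

$$f(x) = \sum_{i < 2^k} c_i x^i = \sum_{i < 2^{k-1}} c_{2i} x^{2i} + \sum_{i < 2^{k-1}} c_{2i+1} x^{2i+1} = f_0(x^2) + x f_1(x^2)$$

Now, notice that if $\omega$ is a primitive $2^k$th root of unity, then $\omega^2$ is a primitive $2^{k-1}$th root of unity. Thus we can recurse with $\mathsf{FFT}(k - 1, \omega^2, f_0)$ and similarly for $f_1$. Let

$$[e_{0,0}, \dots, e_{0, 2^{k-1} - 1}] = \mathsf{FFT}(k - 1, \omega^2, f_0)$$

$$[e_{1,0}, \dots, e_{1, 2^{k-1} - 1}] = \mathsf{FFT}(k - 1, \omega^2, f_1)$$

By assumption $e_{i,j} = f_i((\omega^2)^j)$. So, for any $j$ we have

$$f(\omega^j) = f_0((\omega^2)^j) + \omega^j f_1((\omega^2)^j)$$

Now, since $j$ may be larger than $2^{k-1} - 1$, we need to reduce it mod $2^{k-1}$, relying on the fact that if $\tau$ is an $n$th root of unity then $\tau^j = \tau^{j \bmod n}$ since $\tau^n = 1$. Thus, $(\omega^2)^j = (\omega^2)^{j \bmod 2^{k-1}}$ and so we have

$$f(\omega^j) = f_0((\omega^2)^{j \bmod 2^{k-1}}) + \omega^j f_1((\omega^2)^{j \bmod 2^{k-1}}) = e_{0, j \bmod 2^{k-1}} + \omega^j e_{1, j \bmod 2^{k-1}}$$

We can compute the array $W = [1, \omega, \dots, \omega^{2^k - 1}]$ in time $O(n)$ (since each entry is the previous entry times $\omega$). Then we can compute each entry of the output in $O(1)$ as

$$f(\omega^j) = e_{0, j \bmod 2^{k-1}} + W[j] \cdot e_{1, j \bmod 2^{k-1}}$$

There are $n$ such entries, so this takes time $O(n)$.

This concludes the recursive definition of the algorithm $\mathsf{FFT}(k, \omega, f)$.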

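Algorithm: computing $\mathsf{eval}_{A_k}$

- Input: $f = [c_0, \dots, c_{2^k - 1}]$, the coefficients of polynomial $f(x) = \sum_{i < 2^k} c_i x^i$

- $\mathsf{FFT}(k, \omega, f)$:

  - If $k = 0$, return $[c_0]$

  - Compute $f_0 = [c_0, c_2, \dots, c_{2^k - 2}]$, the even coefficients of $f$, corresponding to $f_0(x) = \sum_{i < 2^{k-1}} c_{2i} x^i$

  - Compute $f_1 = [c_1, c_3, \dots, c_{2^k - 1}]$, the odd coefficients of $f$, corresponding to $f_1(x) = \sum_{i < 2^{k-1}} c_{2i+1} x^i$

  - $e_0 \gets \mathsf{FFT}(k - 1, \omega^2, f_0)$ and $e_1 \gets \mathsf{FFT}(k - 1, \omega^2, f_1)$

  - For each $j < 2^k$, output entry $j$ as $e_{0, j \bmod 2^{k-1}} + \omega^j \cdot e_{1, j \bmod 2^{k-1}}$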
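The recursive algorithm can be sketched in Rust as follows. This is a minimal illustration over the toy field $\mathbb{F}_{17}$, chosen here because $16 = 2^4$ divides $17 - 1$ so that primitive $2^k$th roots of unity exist for $k \leq 4$ (for instance 13 for $k = 2$ and 9 for $k = 3$). The function names are hypothetical, and `naive_evaluations` is included only to cross-check the result.

```rust
const P: u64 = 17; // toy prime modulus (assumption for this sketch)

/// FFT(k, omega, f): evaluations of f at 1, omega, ..., omega^(2^k - 1).
/// `f` holds 2^k coefficients and `omega` is a primitive 2^k-th root of unity mod P.
pub fn fft(k: u32, omega: u64, f: &[u64]) -> Vec<u64> {
    let n = 1usize << k;
    assert_eq!(f.len(), n, "f must have exactly 2^k coefficients");
    if k == 0 {
        return vec![f[0] % P]; // a constant polynomial evaluated at 1
    }
    // Split into even-index and odd-index coefficients.
    let f0: Vec<u64> = f.iter().step_by(2).copied().collect();
    let f1: Vec<u64> = f.iter().skip(1).step_by(2).copied().collect();
    // Recurse with omega^2, a primitive 2^(k-1)-th root of unity.
    let omega_sq = omega * omega % P;
    let e0 = fft(k - 1, omega_sq, &f0);
    let e1 = fft(k - 1, omega_sq, &f1);
    // Combine: f(omega^j) = e0[j mod n/2] + omega^j * e1[j mod n/2].
    let half = n / 2;
    let mut out = Vec::with_capacity(n);
    let mut w = 1; // running power omega^j
    for j in 0..n {
        out.push((e0[j % half] + w * e1[j % half]) % P);
        w = w * omega % P;
    }
    out
}

/// Naive quadratic-time evaluation at the same points, for comparison.
pub fn naive_evaluations(omega: u64, f: &[u64]) -> Vec<u64> {
    let mut x = 1;
    let mut out = Vec::with_capacity(f.len());
    for _ in 0..f.len() {
        out.push(f.iter().rev().fold(0, |acc, &c| (acc * x + c) % P));
        x = x * omega % P;
    }
    out
}
```

For $f = 1 + 2x + 3x^2 + 4x^3$ and $\omega = 13$, `fft(2, 13, &[1, 2, 3, 4])` returns `[10, 6, 15, 7]`, matching the naive evaluation.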

Now let’s analyze the time complexity. Let $T(n)$ be the complexity on an instance of size $n$ (that is, for $n = 2^k$).

Looking back at what we have done, we have done

- $O(n)$ for computing $f_0$ and $f_1$

- two recursive calls, each of size $n/2$

- $O(n)$ for computing the powers of $\omega$

- $O(n)$ for combining the results of the recursive calls

In total, this is $O(n) + 2T(n/2)$. Solving this recurrence yields $T(n) = O(n) \cdot \log n = O(n \log n)$. Basically, there are $\log n$ recursions before we hit the base case, and each step takes time $O(n)$.
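One way to see where the bound comes from is to unroll the recurrence. The constant $c$ below is an assumption introduced only for this illustration: it stands for a bound on the non-recursive work per input element. For $n = 2^k$,

$$T(n) \leq 2T(n/2) + cn \leq 4T(n/4) + 2cn \leq \dots \leq 2^k T(1) + k \cdot cn = n \cdot T(1) + cn \log_2 n$$

so the $n \log_2 n$ term dominates and $T(n) = O(n \log n)$.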

Now, in practice there are ways to describe this algorithm non-recursively that have better concrete performance, but that’s out of scope for this document. Read the code if you are interested.

Using the FFT algorithm to compute

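So far we have a fast way to compute $\mathsf{eval}_{A_k}(f)$ all at once, where $A_k$ is the set of powers of a primitive $2^k$th root of unity $\omega$. For convenience let $n = 2^k$.

Now we want to go the other way and compute a polynomial given an array of evaluations. Specifically, $n$ evaluations $[f(x_0), f(x_1), \dots, f(x_{n-1})]$ uniquely define a polynomial of degree at most $n - 1$. This can be written as a system of $n$ equations

$$\begin{aligned} f(x_0) &= c_0 + c_1 x_0 + \dots + c_{n-1} x_0^{n-1} \\ f(x_1) &= c_0 + c_1 x_1 + \dots + c_{n-1} x_1^{n-1} \\ &\;\;\vdots \\ f(x_{n-1}) &= c_0 + c_1 x_{n-1} + \dots + c_{n-1} x_{n-1}^{n-1} \end{aligned}$$

which can be rewritten as a matrix vector product.

$$\begin{bmatrix} f(x_0) \\ f(x_1) \\ \vdots \\ f(x_{n-1}) \end{bmatrix} = \begin{bmatrix} 1 & x_0 & \dots & x_0^{n-1} \\ 1 & x_1 & \dots & x_1^{n-1} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_{n-1} & \dots & x_{n-1}^{n-1} \end{bmatrix} \begin{bmatrix} c_0 \\ c_1 \\ \vdots \\ c_{n-1} \end{bmatrix}$$

This $n \times n$ matrix is a Vandermonde matrix and it just so happens that square Vandermonde matrices are invertible iff the $x_i$ are distinct. Since we purposely selected our $x_i$ to be the powers of $\omega$, a primitive $n$-th root of unity, by definition the $x_i = \omega^i$ are distinct.

Therefore, to compute the polynomial given the corresponding array of evaluations (i.e. interpolation) we can solve for the polynomial’s coefficients using the inverse of the matrix. All we need now is the inverse of this matrix, which is slightly complicated to compute. I’m going to skip it for now, but if you have the details please make a pull request.

Substituting in the inverse matrix we obtain the equation for interpolation.

$$\begin{bmatrix} c_0 \\ c_1 \\ \vdots \\ c_{n-1} \end{bmatrix} = \frac{1}{n} \begin{bmatrix} 1 & 1 & \dots & 1 \\ 1 & \omega^{-1} & \dots & \omega^{-(n-1)} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & \omega^{-(n-1)} & \dots & \omega^{-(n-1)(n-1)} \end{bmatrix} \begin{bmatrix} f(1) \\ f(\omega) \\ \vdots \\ f(\omega^{n-1}) \end{bmatrix}$$

Observe that this equation is nearly identical to the original equation for evaluation, except with the following substitution.

$$\omega^i \Rightarrow \frac{1}{n} \omega^{-i}$$

Consequently and perhaps surprisingly, we can reuse the FFT algorithm $\mathsf{eval}_{A_k}$ in order to compute the inverse, $\mathsf{interp}_{A_k}$.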

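So, suppose we have an array $[a_0, \dots, a_{n-1}]$ of field elements (which you can think of as a function $A \to F$) and we want to compute the coefficients of a polynomial $p$ with $p(\omega^i) = a_i$.

To this end, define a polynomial $q$ by $q = \sum_{j < n} a_j x^j$. That is, the polynomial whose coefficients are the evaluations in our array that we’re hoping to interpolate.

Now, let $[e_0, \dots, e_{n-1}] = \mathsf{FFT}(k, \omega^{-1}, q)$.

That is, we’re going to feed $q$ into the FFT algorithm defined above with $\omega^{-1}$ as the $2^k$th root of unity. It is not hard to check that if $\omega$ is an $n$-th root of unity, so is $\omega^{-1}$. Remember: the resulting values are the evaluations of $q$ on the powers of $\omega^{-1}$, so $e_i = q(\omega^{-i}) = \sum_{j < n} a_j \omega^{-ij}$.

Now, let $p = \sum_{i < n} e_i x^i$. That is, re-interpret the values $e_i$ returned by the FFT as the coefficients of a polynomial. I claim that $p$ is almost the polynomial we are looking for. Let’s calculate what values $p$ takes on at the powers of $\omega$.

$$
\begin{aligned}
p(\omega^s) &= \sum_{i < n} e_i \omega^{si} \\
&= \sum_{i < n} \omega^{si} \sum_{j < n} a_j \omega^{-ij} \\
&= \sum_{j < n} a_j \sum_{i < n} \omega^{i(s - j)}
\end{aligned}
$$

Now, let’s examine the quantity $c_{s,j} := \sum_{i < n} \omega^{i(s-j)}$. We claim that if $s = j$, then $c_{s,j} = n$, and if $s \neq j$, then $c_{s,j} = 0$. The first claim is clear since

$$
c_{s,s} = \sum_{i < n} \omega^{i \cdot 0} = \sum_{i < n} 1 = n
$$

For the second claim, we will prove that $\omega^{s-j} c_{s,j} = c_{s,j}$. This implies that $(\omega^{s-j} - 1) c_{s,j} = 0$. So either $\omega^{s-j} = 1$ or $c_{s,j} = 0$. The former cannot be the case since it implies $\omega^s = \omega^j$, which in turn implies $s = j$, which is impossible since we are in the case $s \neq j$. Thus we have $c_{s,j} = 0$ as desired.

So let’s show that $c_{s,j}$ is invariant under multiplication by $\omega^{s-j}$. Basically, it will come down to the fact that $\omega^n = \omega^0$.

$$
\begin{aligned}
\omega^{s-j} c_{s,j} &= \omega^{s-j} \sum_{i < n} \omega^{i(s-j)} \\
&= \sum_{i < n} \omega^{(i+1)(s-j)} \\
&= \omega^{n(s-j)} + \sum_{1 \le i < n} \omega^{i(s-j)} \\
&= \omega^{0 \cdot (s-j)} + \sum_{1 \le i < n} \omega^{i(s-j)} \\
&= c_{s,j}
\end{aligned}
$$

So now we know that

$$
p(\omega^s) = \sum_{j < n} a_j c_{s,j} = a_s \cdot n
$$

So if we define $p' = p / n$, then $p'(\omega^s) = a_s$ for every $s$ as desired. Thus we have our interpolation algorithm, sometimes called an inverse FFT or IFFT: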

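Algorithm: computing $\mathsf{interp}_A$

Input: $[a_0, \dots, a_{n-1}]$ the points we want to interpolate and $\omega$ a $2^k$th root of unity.

Interpret the input array as the coefficients of a polynomial $q = \sum_{i < n} a_i x^i$.

Let $[e_0, \dots, e_{n-1}] = \mathsf{FFT}(k, \omega^{-1}, q)$.

Output the polynomial $\sum_{i < n} (e_i / n) x^i$. I.e., in terms of the dense-coefficients form, output the vector $[e_0 / n, \dots, e_{n-1} / n]$.

Note that this algorithm also takes time $O(n \log n)$.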
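As a rough sketch of the inverse FFT, the following Python snippet runs the forward FFT with $\omega^{-1}$ and divides by $n$. The toy field $\mathbb{F}_{17}$ and the root $\omega = 2$ are assumptions chosen for illustration, and the compact `fft` here recomputes $\omega^j$ with `pow` instead of keeping the array $W$, which is simpler but slightly slower.

```python
P = 17  # toy field (assumed); 2 is a primitive 8th root of unity mod 17

def fft(k, omega, coeffs):
    """Recursive FFT: evaluations of the polynomial on the powers of omega."""
    if k == 0:
        return list(coeffs)
    e0 = fft(k - 1, omega * omega % P, coeffs[0::2])
    e1 = fft(k - 1, omega * omega % P, coeffs[1::2])
    half = 1 << (k - 1)
    return [(e0[j % half] + pow(omega, j, P) * e1[j % half]) % P
            for j in range(2 * half)]

def ifft(k, omega, evals):
    """Interpolate: run the FFT with omega^{-1}, then divide every entry by n."""
    n = 1 << k
    omega_inv = pow(omega, P - 2, P)     # omega^{-1} via Fermat's little theorem
    n_inv = pow(n, P - 2, P)             # 1/n in the field
    e = fft(k, omega_inv, evals)         # evaluations reinterpreted as coefficients of q
    return [x * n_inv % P for x in e]

coeffs = [3, 1, 4, 1, 5, 9, 2, 6]
evals = fft(3, 2, coeffs)
recovered = ifft(3, 2, evals)
```

Round-tripping through `fft` and `ifft` should give back the original coefficient list.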

Takeaways

- Polynomials can be represented as a list of coefficients or a list of evaluations on a set $A$

- If the set $A$ is the set of powers of a root of unity, there are $O(n \log n)$ time algorithms for converting back and forth between those two representations

- In evaluations form, polynomials can be added and multiplied in time $O(n)$

  - TODO: caveat about hitting degree

Exercises

- Implement types `DensePolynomial<F: FftField>` and `Evaluations<F: FftField>` that wrap a `Vec<F>` and implement the FFT algorithms described above for converting between them

- Familiarize yourself with the types and functions provided by `ark_poly`

Commitments

A “commitment scheme” is a cryptographic scheme that lets us provide a “commitment” to a given piece of data, in such a way that we can later “open” that commitment.

I recommend checking out section 2.4.1 of David’s book Real-World Cryptography. There are two properties we typically expect of our commitment schemes:

-

Hiding. A commitment to a piece of data should not reveal anything about that data.

-

Binding. There should be no ambiguity about what data is committed to. That is, it should not be possible for the committer to open the commitment to a piece of data other than the one they originally committed to.

There are various kinds of commitments that allow opening in different ways, revealing only part of the data, or some function of the data. Sometimes it is even useful to elide the hiding property entirely— so-called non-hiding commitments.

Simple commitments

The simplest kind of a commitment is one in which opening reveals all of the underlying data. Let’s give a simple construction of such a scheme for committing to field elements. Suppose we have

- $\mathbb{F}_p$ a prime order field, with $p$ being rather large (say on the order of $2^{256}$).

- A hash function $h \colon \mathsf{List}(\mathbb{F}_p) \to \mathbb{F}_p$.

Then we define

$$
\begin{aligned}
\mathsf{commit}(x, r) &= h([x, r]) \\
\mathsf{open}(c) &= (x, r) \\
\mathsf{verify}(c, x, r) &= \mathsf{true} \text{ if and only if } c = h([x, r])
\end{aligned}
$$

The argument $r$ of the $\mathsf{commit}$ function is data that must only be known to the committer (until the commitment is opened). When a committer wants to commit to a field element $x$, they sample a random “blinder” $r \in \mathbb{F}_p$ and hash it together with $x$ to form the commitment.

To open the commitment $c$, they simply provide the committed value together with the blinder. Alternatively, if the verifier already knows $x$, they can just provide $r$, i.e. $\mathsf{open}(x, c) = r$. Finally, given the commitment and the opening, we can verify whether the input was the value originally committed to using the $\mathsf{verify}$ function.

If the hash function is collision-resistant, then this function is binding (because there’s no way the committer could find another preimage of $h([x, r])$).

If the hash function is one-way, then this commitment is also hiding (assuming $r$ is revealed only as part of the opening).
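A minimal sketch of this hash-based scheme, assuming SHA-256 as a stand-in for $h$ and the Mersenne prime $2^{127} - 1$ as a stand-in for the field order (both are illustrative choices, not taken from the text):

```python
import hashlib
import secrets

# Illustrative modulus only: 2^127 - 1 stands in for the field order p.
P = 2**127 - 1

def h(values):
    """Hash a list of field elements to a field element (SHA-256 is an assumed stand-in)."""
    data = b"".join(v.to_bytes(16, "big") for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % P

def commit(x, r):
    return h([x, r])

def verify(c, x, r):
    return c == h([x, r])

x = 42
r = secrets.randbelow(P)      # the blinder, known only to the committer until opening
c = commit(x, r)
```

Opening amounts to revealing `(x, r)`; anyone can then recompute the hash and compare it with `c`.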

Commitments are often used in protocols between provers and verifiers. The following illustration provides an example with a prover named Peggy and a verifier named Victor.

Here Peggy commits to an input using a blinder, obtains the commitment and sends it to Victor. The interlocutors continue their protocol, but eventually to convince Victor of her claims, Peggy must send the opening proof to her earlier commitment. Victor verifies the opening (i.e. the input and blinder) against the commitment. If the verification fails, then Victor knows that Peggy was trying to trick him, otherwise Victor has sufficient assurances that Peggy was telling the truth.

Algebraic and homomorphic commitments

Instead of a cryptographic hash function, we can use elliptic curve scalar multiplication to construct a commitment scheme. Here scalar multiplication is used like a one-way function based on the hardness assumption of the elliptic curve discrete logarithm problem (ECDLP). Suppose we have

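- $\mathbb{F}_p$ a prime order field, with $p$ being large (e.g. something like $2^{256}$).

- Publicly agreed generator point $G$ over an elliptic curve $E(\mathbb{F}_p)$

- Another publicly agreed curve point $H$ for which no one knows the discrete logarithm

$$
\begin{aligned}
\mathsf{commit}(x, r) &= xG + rH \\
\mathsf{open}(c) &= (x, r)
\end{aligned}
$$

where $x$ is the value being committed to, $r$ is a random blinding factor and the commitment $c = \mathsf{commit}(x, r)$ is a curve point.

These commitments are algebraic (i.e. they do not use a boolean-based cryptographic hash function) and have homomorphic properties: you can add commitments together to form another commitment of the added committed values. For example, if you have commitments $A$ and $B$, you can perform:

$$
\begin{aligned}
A + B &= \mathsf{commit}(x_a, r_a) + \mathsf{commit}(x_b, r_b) \\
&= x_a G + r_a H + x_b G + r_b H \\
&= (x_a + x_b) G + (r_a + r_b) H \\
&= \mathsf{commit}(x_a + x_b, r_a + r_b)
\end{aligned}
$$

In other words, the sum of commitments $A$ and $B$ is equal to the commitment of the sum of the two committed values $x_a$ and $x_b$ and blinders $r_a$ and $r_b$. This is possible because in such a scheme scalar multiplication distributes over the addition of scalars.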
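The homomorphic property can be checked numerically. The sketch below swaps the elliptic curve for a tiny multiplicative group (the order-509 subgroup of $\mathbb{Z}_{1019}^*$), so $xG + rH$ is written as $g^x h^r$; the parameters are assumptions chosen for readability and are neither secure nor a source of an $H$ with unknown discrete logarithm.

```python
# Toy group (assumed): the order-509 subgroup of squares in Z_1019^*, 1019 = 2*509 + 1.
# Written multiplicatively, so xG + rH in the text becomes G^x * H^r here.
P, Q = 1019, 509
G, H = 4, 9                   # two squares, hence elements of the order-509 subgroup

def commit(x, r):
    return pow(G, x % Q, P) * pow(H, r % Q, P) % P

def add(c1, c2):
    return c1 * c2 % P        # the group operation ("point addition")

a = commit(10, 123)
b = commit(32, 456)
```

Adding the two commitments gives the commitment to the summed values under the summed blinders.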

As a cryptographic primitive, the ability to find a public curve point for which no one knows the discrete logarithm may, at first, seem rather mind-blowing and powerful.

Actually, it’s as easy as it is awesome to find such a point: simply perform rejection sampling by cryptographically hashing $G$ (or, respectively, the hash output), using the output as the $x$-coordinate of a candidate point on $E$ and checking whether it’s valid. The first valid curve point obtained is $H$ and, by the hardness assumption of the ECDLP, no one knows its discrete logarithm.

Since approximately half of the hash outputs will be valid $x$-coordinates of curve points on $E$, sampling will terminate very quickly. Indeed, as we will see later, this process can be used to sample many public curve points for which the discrete logarithms are unknown; the so-called hash to curve algorithm.

Pedersen commitments

The homomorphic commitment $xG + rH$ described above is known as a Pedersen commitment. If you remove the $rH$ term you get a non-hiding commitment, called a Pedersen hash. Both rely on the ECDLP hardness assumption.

This means that, at least theoretically, you might be lucky (or have a quantum computer) and figure out that $H = uG$, which would allow you to find different values $x'$ and $r'$ to open the commitment. We say that Pedersen commitments are computationally binding and not unconditionally binding. For example, you could express $c = xG + rH$ alternatively as $c = xG + ruG$ and compute a satisfying opening pair $x' = ru$ and $r' = \frac{x}{u}$.
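To see why knowing the discrete logarithm breaks binding, here is a small sketch in the same kind of toy multiplicative group (an assumption standing in for the curve), with a hypothetical leaked value `u` such that $H = uG$:

```python
# Toy group (assumed): order-509 subgroup of Z_1019^*, written multiplicatively.
P, Q = 1019, 509
G = 4
u = 77                        # hypothetical leaked discrete logarithm of H
H = pow(G, u, P)              # H = uG in the additive notation of the text

def commit(x, r):
    return pow(G, x % Q, P) * pow(H, r % Q, P) % P

x, r = 10, 123
c = commit(x, r)

# A second opening of the same commitment: x' = r*u and r' = x/u (mod the group order).
x2 = r * u % Q
r2 = x * pow(u, -1, Q) % Q
```

Both `(x, r)` and `(x2, r2)` open `c`, which is exactly what computational binding rules out when `u` is unknown.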

On the other hand, Pedersen commitments are unconditionally hiding, as there is no way (even with a magic computer) to reveal what $x$ is without knowing $r$. Lack of perfect binding is the reason why most of the “proofs” we will see later in this book are not referred to as proofs, but instead are referred to as arguments of knowledge (although we may care little about this distinction). Just remember that you need perfect binding to be called a proof.

Interestingly, it is impossible to have a commitment scheme that has both perfect hiding and perfect binding.

To recap, in cryptography the following distinctions are important

-

Perfect. The property that an algorithm is statistically sound without hardness assumptions, also known as unconditional or statistical soundness.

-

Computational. The algorithm relies on a hardness assumption or computational limitation for soundness.

Thus, said another way, Pedersen commitments provide perfect hiding and computational binding.

Vector commitments

We can commit to several values $x_1, \dots, x_k$ by sending separate Pedersen commitments to all of these values as such:

$$
x_1 G + r_1 H, \quad \dots, \quad x_k G + r_k H
$$

But we can instead batch/aggregate all of these commitments together as a single commitment:

$$
x_1 G_1 + \dots + x_k G_k + rH
$$

with $G_1, \dots, G_k, H$ independent bases with unknown discrete logarithms.

If you represent the $x$s and the $G$s as two vectors $\vec{x} = (x_1, \dots, x_k)$ and $\vec{G} = (G_1, \dots, G_k)$, we can quickly write the previous statement as an inner product

$$
\langle \vec{x}, \vec{G} \rangle + rH
$$

Vector commitments (sometimes referred to as multi-commitments) are a powerful construction because an arbitrarily large vector can be committed with a single curve point.

The naive approach to constructing an opening proof for a length $k$ vector commitment has size $O(k)$. It is simply the tuple $(x_1, \dots, x_k, r)$. As we will see later, opening proofs for vector commitments are an interesting topic and there is a much more efficient algorithm.

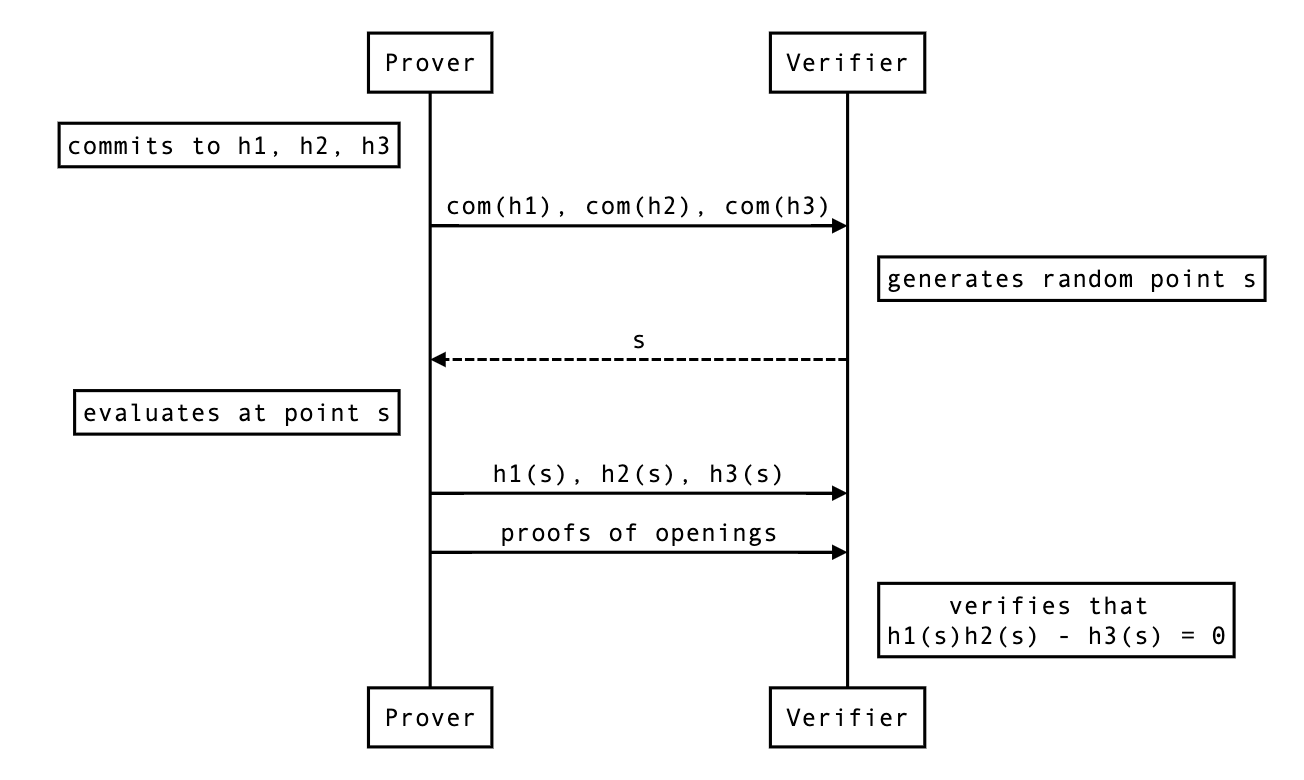

Polynomial commitments

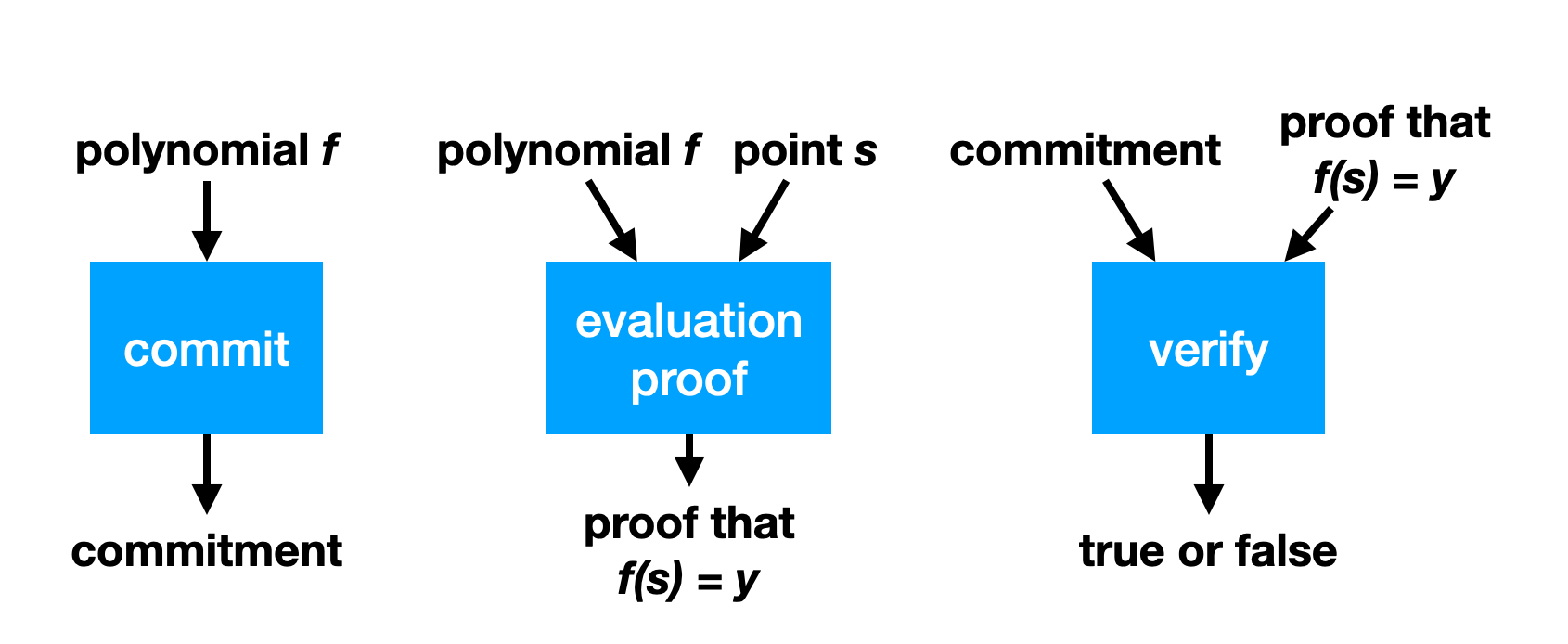

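To construct SNARKs we use polynomial commitments. A polynomial commitment scheme for a field $F$ (or it could even be a ring) is a way of committing to a polynomial $f \in F[x]$ to get a commitment $c$, in such a way that for any $\alpha \in F$, you can provide $y = f(\alpha)$, along with an “opening proof” $\pi$ that proves that the polynomial committed to in $c$ equals $y$ when evaluated at $\alpha$.

In other words, it is a type of commitment $C$, a type of randomness $R$, a type of opening proof $P$ along with algorithms

$$
\begin{aligned}
\mathsf{commit} &\colon F[x] \times R \to C \\
\mathsf{open} &\colon C \times F \times (F[x] \times R) \to F \times P \\
\mathsf{verify} &\colon C \times F \times (F \times P) \to \mathsf{Bool}
\end{aligned}
$$

such that for any $f \in F[x]$, $r \in R$, $\alpha \in F$, we have

$$
\begin{aligned}
c &:= \mathsf{commit}(f, r) \\
\mathsf{verify}(c, \alpha, \mathsf{open}(c, \alpha, (f, r))) &= \mathsf{true}
\end{aligned}
$$

and if $b \neq f(\alpha)$ then it is not possible to compute $\pi \in P$ such that

$$
\mathsf{verify}(c, \alpha, (b, \pi)) = \mathsf{true}
$$

In other words, if $b \neq f(\alpha)$ then every $\pi$ which is feasible to compute results in $\mathsf{verify}(c, \alpha, (b, \pi)) = \mathsf{false}$.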

One thing that’s pretty cool is that because polynomial commitment schemes let you construct zk-SNARKs, polynomial commitment schemes imply commitment schemes with arbitrary opening functionality. TODO

Constructing polynomial commitment schemes

All known constructions of polynomial commitment schemes are a bit complicated. The easiest to describe is called the Kate (pronounced “kah-TAY”) scheme, also known as “KZG”. It requires a “prime-order group with a pairing”, which is three groups $G_1, G_2, G_T$ of prime order $p$ (hence, all isomorphic cyclic groups) together with a function $e \colon G_1 \times G_2 \to G_T$ such that for any $a_1, a_2 \in \mathbb{F}_p$, $g_1 \in G_1$, $g_2 \in G_2$, we have

$$
e(a_1 \cdot g_1, a_2 \cdot g_2) = a_1 a_2 \cdot e(g_1, g_2)
$$

$e$ is called a “pairing” or a “bilinear pairing”. What this lets us do is “multiply in the scalar” but only once.

Fix a degree bound $d$ on the polynomials we would like to be able to commit to. The KZG scheme will let us commit to polynomials in $\mathbb{F}_p[x]_{<d}$. As a preliminary, fix generators $g_1 \in G_1$, $g_2 \in G_2$ arbitrarily.

The first thing to know about the KZG scheme is it requires that we randomly sample some group elements to help us. This is the dreaded and much discussed trusted setup. So, anyway, we start by sampling $\tau$ at random from $\mathbb{F}_p$ and computing for $i < d$,

$$
h_i := (\tau^i) \cdot g_1
$$

together with $\tau \cdot g_2$, which the verification equation below uses. And then throw away $\tau$. The security depends on no one knowing $\tau$, which is sometimes referred to as the toxic waste of the trusted setup. Basically we compute the generator scaled by powers of $\tau$ up to the degree bound. We make a security assumption about the groups which says that all anyone can really do with group elements is take linear combinations of them.

Now suppose we have a polynomial $f \in \mathbb{F}_p[x]_{<d}$ with $f = \sum_{i < d} a_i x^i$ that we would like to commit to. We will describe a version of the scheme that is binding but not hiding, so it may leak information about the polynomial. Now, to commit to $f$, we compute

$$
c_f := \sum_{i < d} a_i \cdot h_i
$$

so that $c_f \in G_1$ and

$$
c_f = \sum_{i < d} a_i \cdot h_i = \sum_{i < d} a_i \tau^i \cdot g_1 = f(\tau) \cdot g_1
$$

So $c_f$ is $g_1$ scaled by $f(\tau)$, and the fact that $G_1$ is an $\mathbb{F}_p$-module (i.e. a vector space whose scalars come from $\mathbb{F}_p$) means we can compute $f(\tau) \cdot g_1$ from the $h_i$ and the coefficients of $f$ without knowing $\tau$.

Now how does opening work? Well, say we want to open at a point $a$ to $b = f(a)$. Then the polynomial $f - b$ vanishes at $a$, which means that it is divisible by the polynomial $x - a$ (exercise, use polynomial division and analyze the remainder).

So, the opener can compute the polynomial

$$
q := \frac{f - b}{x - a}
$$

and commit to it as above to get a commitment $c_q$. And $c_q$ will be the opening proof. It remains only to describe verification. It works like this

$$
\mathsf{verify}(c_f, a, (b, c_q)) := e(c_q, \tau \cdot g_2 - a \cdot g_2) \stackrel{?}{=} e(c_f - b \cdot g_1, g_2)
$$

This amounts to checking: “is the polynomial committed to by $c_f - b \cdot g_1$ equal to the polynomial committed to by $c_q$ times $x - a$”?

To see why, remember that $c_f = f(\tau) \cdot g_1$, and say $c_q = s_q \cdot g_1$ and so we are checking

$$
e(s_q \cdot g_1, (\tau - a) \cdot g_2) \stackrel{?}{=} e((f(\tau) - b) \cdot g_1, g_2)
$$

which by the bilinearity of the pairing is the same as checking

$$
s_q \cdot (\tau - a) = f(\tau) - b
$$
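The pairing check ultimately tests a scalar identity, and that identity can be explored without any group at all. The sketch below works directly in a toy field $\mathbb{F}_{101}$ (an assumption), computes the quotient $q = (f - b)/(x - a)$ by synthetic division, and checks $q(\tau)(\tau - a) = f(\tau) - b$ for a stand-in value of $\tau$; in the real scheme nobody knows $\tau$ and the check happens through the pairing.

```python
P = 101                       # toy prime field, for illustration only

def evaluate(f, x):
    """Horner evaluation; f is a list of coefficients, lowest degree first."""
    acc = 0
    for c in reversed(f):
        acc = (acc * x + c) % P
    return acc

def quotient(f, a):
    """Return q with f(x) - f(a) = q(x) * (x - a), via synthetic division."""
    q = [0] * (len(f) - 1)
    carry = 0
    for i in range(len(f) - 1, 0, -1):
        carry = (f[i] + carry * a) % P
        q[i - 1] = carry
    return q

f = [5, 0, 3, 7]              # 5 + 3x^2 + 7x^3
a = 4                         # opening point
b = evaluate(f, a)            # claimed evaluation
q = quotient(f, a)
tau = 58                      # stands in for the secret of the trusted setup
```

The identity holds at every point, not only at `tau`, because it is an identity of polynomials.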

Bootleproof inner product argument

Polynomial commitments

A polynomial commitment is a scheme that allows you to commit to a polynomial (i.e. to its coefficients). Later, someone can ask you to evaluate the polynomial at some point and give them the result, which you can do while also providing a proof of correct evaluation.

Schwartz-Zippel lemma

TODO: move this section where most relevant

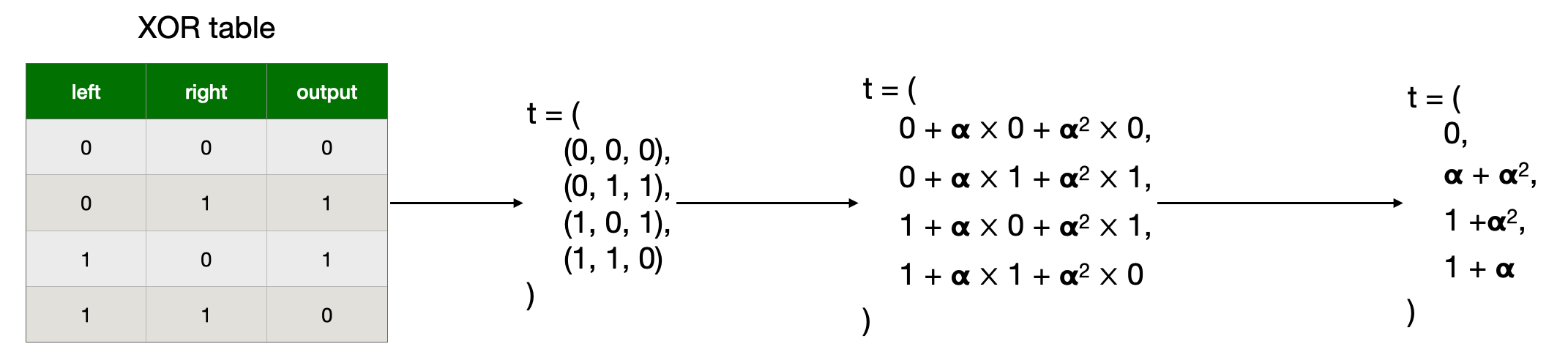

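Let $f(x)$ be a non-zero polynomial of degree $d$ over a field $\mathbb{F}$ of size $n$, then the probability that $f(s) = 0$ for a randomly chosen $s \in \mathbb{F}$ is at most $\frac{d}{n}$.

In a similar fashion, two distinct degree $d$ polynomials $f(X)$ and $g(X)$ can at most intersect in $d$ points. This means that the probability that $f(\alpha) = g(\alpha)$ on a random $\alpha \leftarrow \mathbb{F}$ is at most $\frac{d}{|\mathbb{F}|}$. This is a direct corollary of the Schwartz-Zippel lemma, because it is equivalent to the probability that $h(\alpha) = 0$ with $h(X) := f(X) - g(X)$.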
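The bound is easy to observe on a small example. The following sketch, over an assumed toy field $\mathbb{F}_{101}$ with two arbitrarily chosen degree-3 polynomials, counts the points where they agree:

```python
P = 101                       # toy field size, chosen for illustration

def evaluate(f, x):
    """Evaluate a coefficient list (lowest degree first) at x."""
    return sum(c * pow(x, i, P) for i, c in enumerate(f)) % P

f = [1, 2, 0, 5]              # degree 3
g = [4, 2, 7, 5]              # degree 3, distinct from f
d = 3

# Every point of the field where the two polynomials take the same value.
agreements = [x for x in range(P) if evaluate(f, x) == evaluate(g, x)]
```

A random point therefore exposes the difference between `f` and `g` with probability at least `1 - d / P`.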

Inner product argument

What is an inner product argument?

The inner product argument is the following construction: given the commitments (for now let’s say the hash) of two vectors $\vec{a}$ and $\vec{b}$ of size $n$ and with entries in some field $\mathbb{F}$, prove that their inner product $\langle \vec{a}, \vec{b} \rangle$ is equal to $z$.

There exist different variants of this inner product argument. In some versions, none of the values ($\vec{a}$, $\vec{b}$ and $z$) are given, only commitments. In some other version, which is interesting to us and that I will explain here, only $\vec{a}$ is unknown.

How is that useful?

Inner product arguments are useful for several things, but what we’re using them for in Mina is polynomial commitments. The rest of this post won’t make too much sense if you don’t know what a polynomial commitment is, but briefly: it allows you to commit to a polynomial $f$ and then later prove its evaluation at some point $s$. Check my post on Kate polynomial commitments for more on polynomial commitment schemes.

How does that translate to the inner product argument though? First, let’s see our polynomial $f$ as a vector of coefficients:

$$
\vec{f} = (f_0, \dots, f_n) \text{ such that } f(x) = f_0 + f_1 x + f_2 x^2 + \dots + f_n x^n
$$

Then notice that

$$
f(s) = \langle \vec{f}, (1, s, s^2, \dots, s^n) \rangle
$$

And here’s our inner product again.
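As a quick sanity check of this observation, the sketch below (over an assumed toy field $\mathbb{F}_{101}$, with an arbitrary polynomial) computes an evaluation as an inner product with the vector of powers of $s$:

```python
P = 101                       # toy field, for illustration

def inner(u, v):
    """Inner product of two equal-length vectors over F_P."""
    return sum(a * b for a, b in zip(u, v)) % P

f = [5, 0, 3, 7]                                  # coefficient vector of f
s = 9                                             # evaluation point
powers = [pow(s, i, P) for i in range(len(f))]    # (1, s, s^2, s^3)
z = inner(f, powers)                              # equals f(s)
```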

The idea behind Bootleproof-type of inner product argument

The inner product argument protocol I’m about to explain was invented by Bootle et al. It was later optimized in the Bulletproof paper (hence why we unofficially call the first paper bootleproof), and then some more in the Halo paper. It’s the latter optimization that I’ll explain here.

A naive approach

So before I get into the weeds, what’s the high-level? Well first, what’s a naive way to prove that we know the pre-image of a hash $h$, the vector $\vec{a}$, such that $\langle \vec{a}, \vec{b} \rangle = z$? We could just reveal $\vec{a}$ and let anyone verify that indeed, hashing it gives out $h$, and that it also verifies the equation $\langle \vec{a}, \vec{b} \rangle = z$.

Obviously, we have to reveal $\vec{a}$ itself, which is not great. But we’ll deal with that later, trust me. What we want to tackle first here is the proof size, which is the size of the vector $\vec{a}$. Can we do better?

Reducing the problem to a smaller problem to prove

The inner product argument reduces the opening proof by using an intermediate reduction proof:

Where the size of $\vec{a'}$ is half the size of $\vec{a}$, and as such the final opening proof ($\vec{a'}$) is half the size of our naive approach.

The reduction proof is where most of the magic happens, and this reduction can be applied many times ($\log_2(n)$ times to be exact) to get a final opening proof of size 1. Of course the entire proof is not just the final opening proof of size 1, but all the elements involved in the reduction proofs. It can still be much smaller than the original proof of size $n$.

So most of the proof size comes from the multiple reduction subproofs that you’ll end up creating on the way. Our proof is really a collection of miniproofs or subproofs.

One last thing before we get started: Pedersen hashing and commitments

To understand the protocol, you need to understand commitments. I’ve used hashing so far, but hashing with a hash function like SHA-3 is not great as it has no convenient mathematical structure. We need algebraic commitments, which will allow us to prove things on the committed value without revealing the value committed. Usually what we want is some homomorphic property that will allow us to either add commitments together or/and multiply them together.

For now, let’s see a simple non-hiding commitment: a Pedersen hash. To commit to a single value $x$ simply compute:

$$
xG
$$

where the discrete logarithm of $G$ is unknown. To open the commitment, simply reveal the value $x$.

We can also perform multi-commitments with Pedersen hashing. For a vector of values $(x_1, \dots, x_k)$, compute:

$$
x_1 G_1 + \dots + x_k G_k
$$

where each $G_i$ is distinct and has an unknown discrete logarithm as well. I’ll often shorten the last formula as the inner product $\langle \vec{x}, \vec{G} \rangle$ for $\vec{x} \in \mathbb{F}^k$ and $\vec{G} \in \mathbb{G}^k$. To reveal a commitment, simply reveal the values $x_i$.

Pedersen hashing allows commitments that are non-hiding, but binding, as you can’t open them to a different value than the originally committed one. And as you can see, adding the commitment of $x$ and $y$ gives us the commitment of $x + y$:

$$
xG + yG = (x + y)G
$$

which will be handy in our inner product argument protocol.

The protocol

Set up

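Here are the settings of our protocol. Known only to the prover is the secret vector

$$
\vec{a} = (a_1, a_2, a_3, a_4)
$$

The rest is known to both:

- $\vec{G} = (G_1, G_2, G_3, G_4)$, a basis for Pedersen hashing

- $A = \langle \vec{a}, \vec{G} \rangle$, the commitment of $\vec{a}$

- $\vec{b} = (b_1, b_2, b_3, b_4)$, the powers of some value $s$ such that $\vec{b} = (1, s, s^2, s^3)$

- the result of the inner product $z = \langle \vec{a}, \vec{b} \rangle$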

For the sake of simplicity, let’s pretend that this is our problem, and we just want to halve the size of our secret vector before revealing it. As such, we will only perform a single round of reduction. But you can also think of this step as being already the reduction of another problem twice as large.

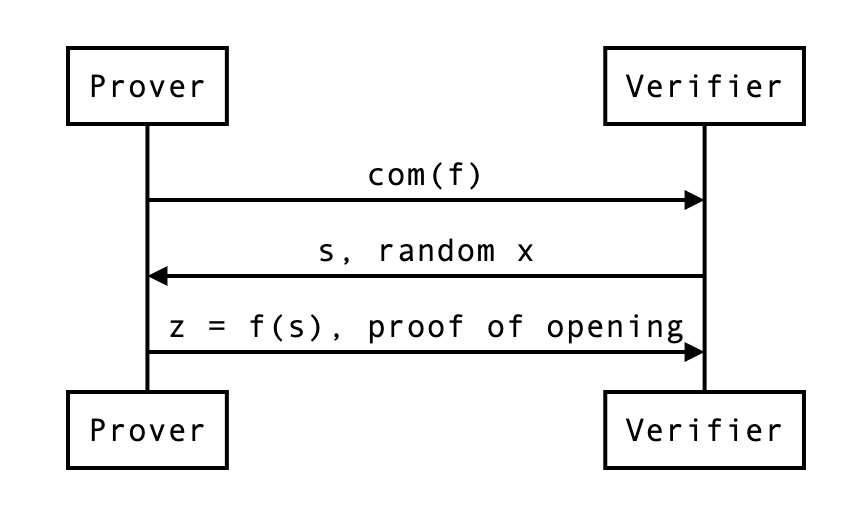

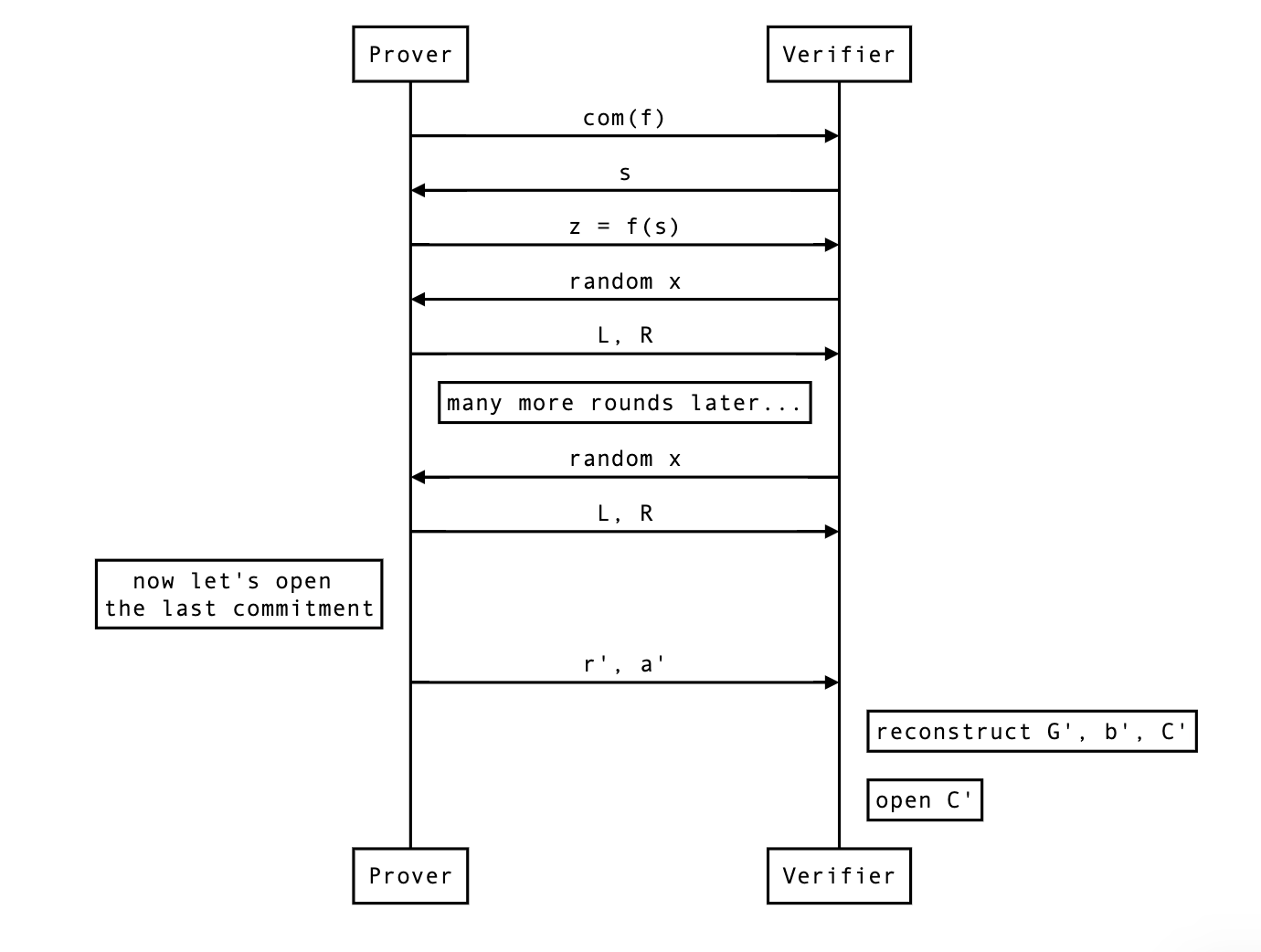

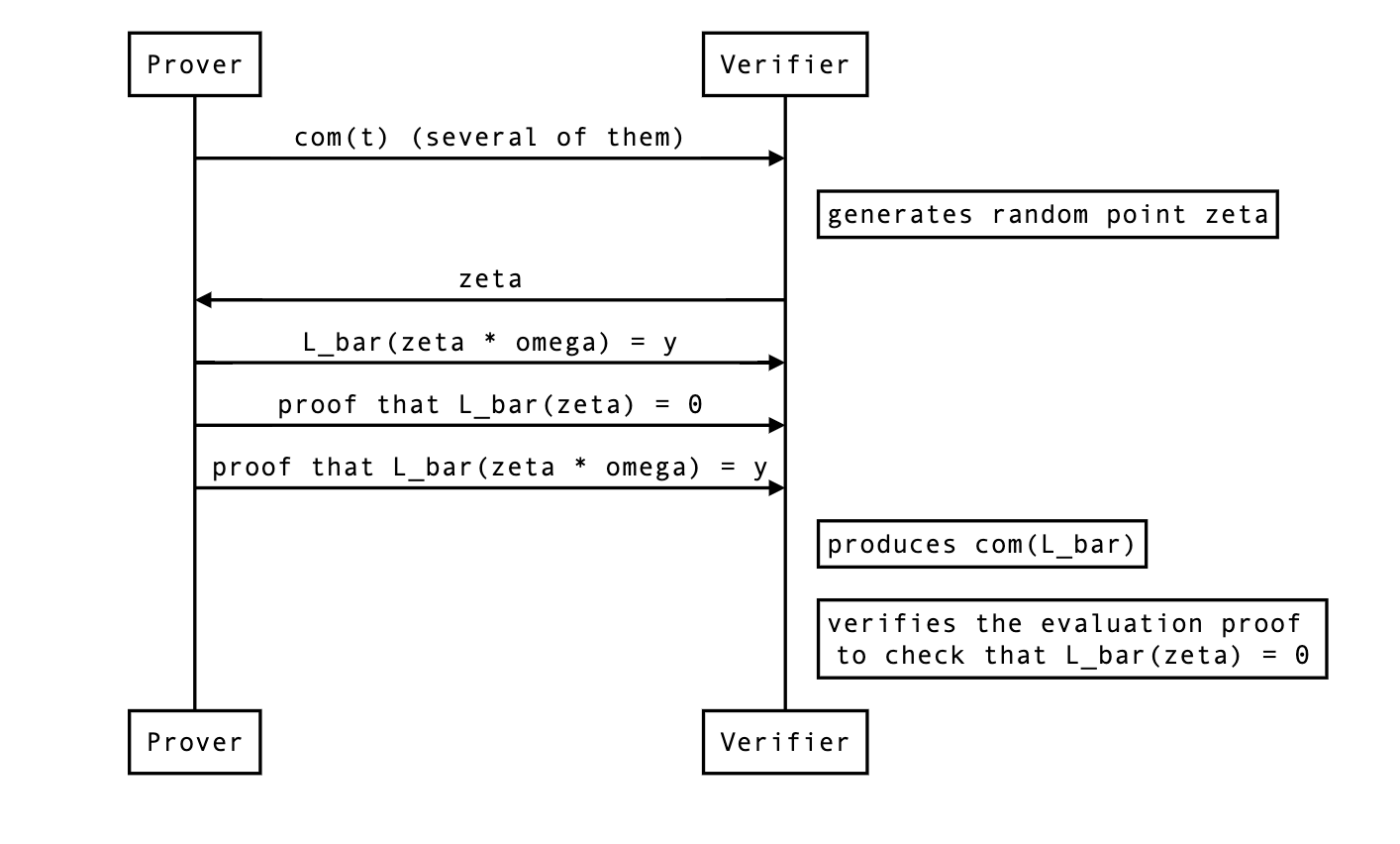

We can picture the protocol as follows:

- The prover first sends a commitment to the polynomial $f$.

- The verifier sends a point $s$, asking for the value $f(s)$. To help the prover perform a proof of correct evaluation, they also send a random challenge $x$. (NOTE: The verifier sends the random challenge $x$ ONLY AFTER they receive the $L$ and $R$)

- The prover sends the result of the evaluation, $z$, as well as a proof.

Does that make sense? Of course what’s interesting to us is the proof, and how the prover uses that random $x$.

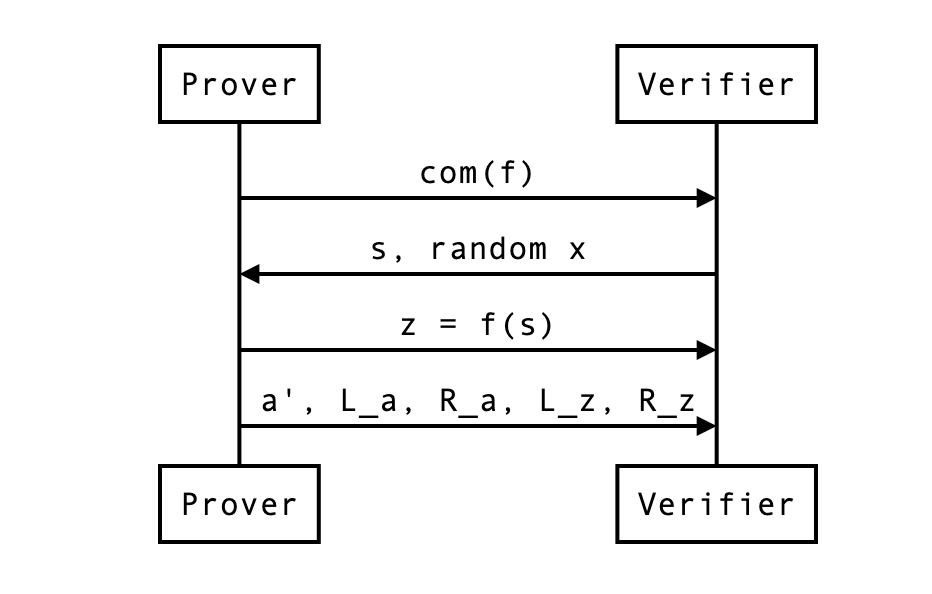

Reduced problem

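First, the prover cuts everything in half. Then they use $x$ to construct linear combinations of these cuts:

$$
\begin{aligned}
\vec{a'} &= x^{-1} (a_1, a_2) + x (a_3, a_4) \\
\vec{b'} &= x (b_1, b_2) + x^{-1} (b_3, b_4) \\
\vec{G'} &= x (G_1, G_2) + x^{-1} (G_3, G_4)
\end{aligned}
$$

This is how the problem is reduced to $\langle \vec{a'}, \vec{b'} \rangle = z'$.

At this point, the prover can send $\vec{a'}$, $\vec{b'}$, and $z'$ and the verifier can check if indeed $\langle \vec{a'}, \vec{b'} \rangle = z'$. But that wouldn’t make much sense would it? Here we also want:

- a proof that proving that statement is the same as proving the previous statement ($\langle \vec{a}, \vec{b} \rangle = z$)

- a way for the verifier to compute $z'$ and $\vec{b'}$ and $A'$ (the new commitment) by themselves.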

The actual proof

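The verifier can compute $\vec{b'}$ as they have everything they need to do so.

What about $A'$, the commitment of $\vec{a'}$ which uses the new $\vec{G'}$ basis? It should be the following value:

$$
\begin{aligned}
A' &= \langle \vec{a'}, \vec{G'} \rangle \\
&= (x^{-1} a_1 + x a_3)(x G_1 + x^{-1} G_3) + (x^{-1} a_2 + x a_4)(x G_2 + x^{-1} G_4) \\
&= A + x^{-2} (a_1 G_3 + a_2 G_4) + x^{2} (a_3 G_1 + a_4 G_2) \\
&= A + x^{-2} L_a + x^{2} R_a
\end{aligned}
$$

So to compute this new commitment, the verifier needs:

- the previous commitment $A$, which they already have

- some powers of $x$, which they can compute

- two curve points $L_a$ and $R_a$, which the prover will have to provide to them

What about $z'$? Recall:

$$
\begin{aligned}
\vec{a'} &= (x^{-1} a_1 + x a_3, \; x^{-1} a_2 + x a_4) \\
\vec{b'} &= (x b_1 + x^{-1} b_3, \; x b_2 + x^{-1} b_4)
\end{aligned}
$$

So the new inner product should be:

$$
\begin{aligned}
z' &= \langle \vec{a'}, \vec{b'} \rangle \\
&= (a_1 b_1 + a_2 b_2 + a_3 b_3 + a_4 b_4) + x^{-2} (a_1 b_3 + a_2 b_4) + x^{2} (a_3 b_1 + a_4 b_2) \\
&= z + x^{-2} L_z + x^{2} R_z
\end{aligned}
$$

Similarly to $A'$, the verifier can recompute $z'$ from the previous value $z$ and two scalar values $L_z$ and $R_z$ which the prover needs to provide.

So in the end, the proof has become:

- the vector $\vec{a'}$ which is half the size of $\vec{a}$

- the $L_a, R_a$ curve points (around two field elements, if compressed)

- the $L_z, R_z$ scalar values

We can update our previous diagram:

In our example, the naive proof was to reveal $\vec{a}$ which was 4 field elements. We are now revealing instead 2 + 2 + 2 = 6 field elements. This is not great, but if $\vec{a}$ was much larger (let’s say 128), the reduction in half would still be of 64 + 2 + 2 = 68 field elements. Not bad no? We can do better though…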
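One round of this reduction can be checked numerically. In the sketch below the "curve points" are replaced by plain integers modulo a prime (an assumption that keeps only the linear structure and has none of the security of a real curve), and the cross terms are named `L_a`, `R_a`, `L_z`, `R_z` after the quantities the prover sends; the seeded generator is only there to make the run reproducible.

```python
import random

Q = 2**61 - 1                 # prime modulus; scalars and toy "points" both live in Z_Q

def inner(u, v):
    return sum(a * b for a, b in zip(u, v)) % Q

def fold(lo, hi, c_lo, c_hi):
    """Entry-wise c_lo * lo + c_hi * hi."""
    return [(c_lo * l + c_hi * h) % Q for l, h in zip(lo, hi)]

rng = random.Random(0)
a = [rng.randrange(Q) for _ in range(4)]          # secret vector
G = [rng.randrange(Q) for _ in range(4)]          # toy stand-ins for the basis points
s = rng.randrange(Q)
b = [pow(s, i, Q) for i in range(4)]              # (1, s, s^2, s^3)
A, z = inner(a, G), inner(a, b)

x = rng.randrange(1, Q)                           # verifier challenge
xi = pow(x, -1, Q)                                # x^{-1}
a2 = fold(a[:2], a[2:], xi, x)                    # a' = x^-1 (a1, a2) + x (a3, a4)
b2 = fold(b[:2], b[2:], x, xi)                    # b' = x (b1, b2) + x^-1 (b3, b4)
G2 = fold(G[:2], G[2:], x, xi)                    # G' = x (G1, G2) + x^-1 (G3, G4)

# Cross terms provided by the prover.
L_a, R_a = inner(a[:2], G[2:]), inner(a[2:], G[:2])
L_z, R_z = inner(a[:2], b[2:]), inner(a[2:], b[:2])
```

The verifier-side values `A + x^-2 L_a + x^2 R_a` and `z + x^-2 L_z + x^2 R_z` should match the commitment and inner product of the folded vectors.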

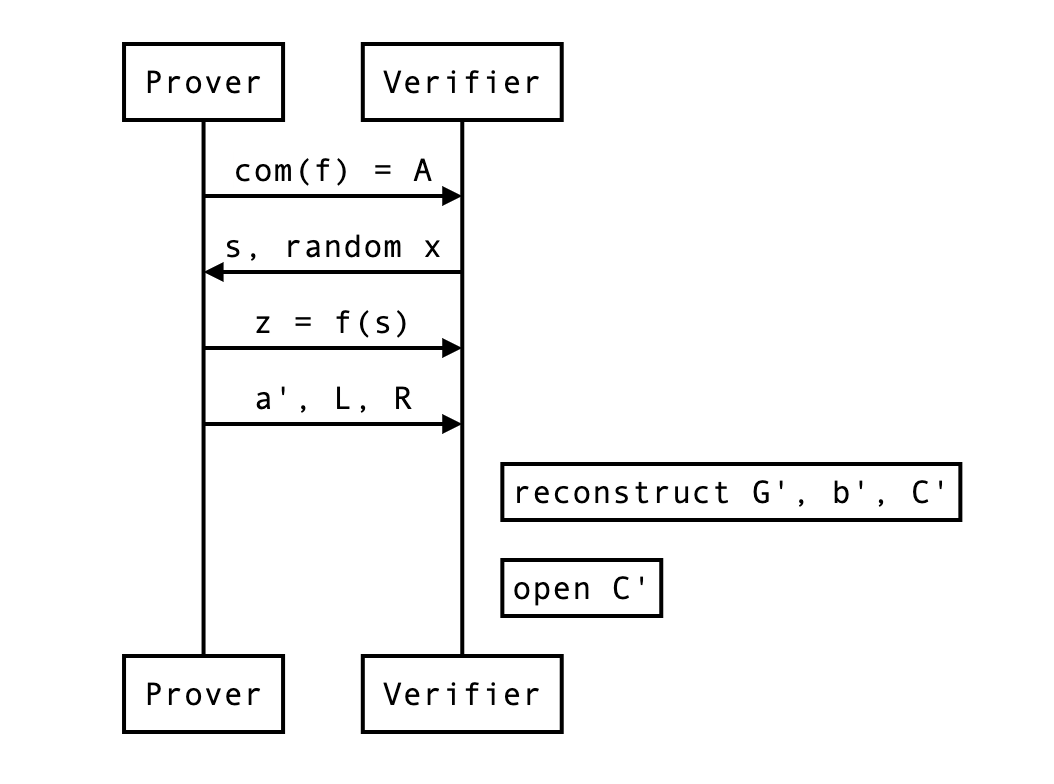

The HALO optimization

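The HALO optimization is similar to the bulletproof optimization, but it further reduces the size of our proof, so I’ll explain that directly.

With the HALO optimization, the prover translates the problem into the following:

$$
C = A + zU = \langle \vec{a}, \vec{G} \rangle + \langle \vec{a}, \vec{b} \rangle U
$$

where $U$ is an additional curve point. This is simply a commitment of $\vec{a}$ and $z$.

A naive proof is to reveal $\vec{a}$ and let the verifier check that it is a valid opening of the following commitment. Then, that commitment will be reduced recursively to commitments of the same form.

$$
C' = A' + z'U = \langle \vec{a'}, \vec{G'} \rangle + \langle \vec{a'}, \vec{b'} \rangle U
$$

The whole point is that the reduction proofs will be smaller than our previous bootleproof-inspired protocol.

How does the reduction proof work? Notice that this is the new commitment:

$$
\begin{aligned}
C' &= \langle \vec{a'}, \vec{G'} \rangle + \langle \vec{a'}, \vec{b'} \rangle U \\
&= [A + x^{-2} L_a + x^{2} R_a] + [z + x^{-2} L_z + x^{2} R_z] U
\end{aligned}
$$

This is simply from copy/pasting the equations from the previous section. This can be further reduced to:

$$
C' = C + x^{-2} (L_a + L_z U) + x^{2} (R_a + R_z U)
$$

And now you see that the verifier only needs, in addition to $\vec{a'}$, two curve points (~ 2 field elements):

$$
\begin{aligned}
L &= L_a + L_z U \\
R &= R_a + R_z U
\end{aligned}
$$

This is in contrast to the 4 field elements per reduction needed without this optimization. Much better right?

At the end of a round (or the protocol) the verifier can compute the expected commitment $C'$ as such:

$$
C' = C + x^{-2} L + x^{2} R
$$

and open it by computing the following and checking it is indeed equal to $C'$:

$$
\langle \vec{a'}, \vec{G'} \rangle + \langle \vec{a'}, \vec{b'} \rangle U
$$

For this, the verifier needs to recompute $\vec{G'}$ and $\vec{b'}$ by themselves, which they can as they have all the necessary information. We can update our previous diagram: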

What about zero-knowledge?

Didn’t we forget something? Oh right, we’re sending $\vec{a'}$ in clear, a single element that will leak some information about the original vector $\vec{a}$ (as it is a linear combination of that original vector).

The simple solution is to alter our Pedersen commitment to make it hiding on top of being binding:

$$
C = A + zU + rH = \langle \vec{a}, \vec{G} \rangle + \langle \vec{a}, \vec{b} \rangle U + rH
$$

where $H$ is another generator we don’t know the discrete logarithm of, and $r$ is a random scalar value generated by the prover to blind the commitment.

But wait, each $L$ and $R$ also leaks some information, as they are also made from the original secret vector $\vec{a}$. Remember, $L = L_a + L_z U$. No worries, we can perform the same treatment on that curve point and blind it like so:

$$
L = L_a + L_z U + r_L H
$$

and similarly for $R$ with a blinder $r_R$.

In order to open the final commitment, the verifier first recomputes the expected commitment as before:

$$
C' = C + x^{-2} L + x^{2} R
$$

then uses $\vec{a'}$ and the final blinding value $r'$ sent by the prover (and composed of $r$ and all the rounds’ $r_L$ and $r_R$), as well as the reconstructed $\vec{G'}$ and $\vec{b'}$, to open the commitment:

$$
\langle \vec{a'}, \vec{G'} \rangle + \langle \vec{a'}, \vec{b'} \rangle U + r'H
$$

with $r'$ being equal to something like $r + \sum_i (x_i^{-2} r_{L_i} + x_i^{2} r_{R_i})$.

At this point, the protocol requires the sender to send:

- 2 curve points $L$ and $R$ per round

- 1 scalar value $a'$ for the final opening

- 1 blinding (scalar) value $r'$ for the final opening

But wait… one last thing. In this protocol the prover is revealing $a'$, and even if they were not, by revealing $r'$ they might allow someone to recompute $a'$… The HALO paper contains a generalized Schnorr protocol to open the commitment without revealing $a'$ nor $r'$.

from Vanishree:

- So in general the more data we send the more randomness we need to ensure the private aspects are hidden, right

- The randomness in a commitment is because we are sending the commitment elements

- The random elements mixed with the polynomial (in the new zkpm technique) is because we send evaluations of the polynomial at zeta and zeta omega later

- Zk in Schnorr opening is because we reveal the opening values

where can I find a proof? perhaps appendix C of https://eprint.iacr.org/2017/1066.pdf

The real protocol, and a note on non-interactivity

Finally, we can look at what the real protocol ends up looking like, with $\log_2(n)$ rounds of reduction followed by a commitment opening.

So far the protocol was interactive, but you can make it non-interactive by simply using the Fiat-Shamir transformation. That’s all I’ll say about that.

Different functionalities

There are a number of useful things that we can do on top of a bootleproof-style polynomial commitment. I’ll briefly list them here.

Enforcing an upperbound on the polynomial degree

Imagine that you want to enforce a maximum degree on a committed polynomial. You can do that by asking the prover to shift the coefficients of the polynomial to the right, so much that it becomes impossible to fit them if the polynomial were to be more than the maximum degree we wanted to enforce. This is equivalent to the following:

$$
\text{right\_shift}(f) = x^{n - max} f
$$

When the verifier verifies the opening, they will have to right shift the received evaluation in the same way.

Aggregating opening proofs for several polynomials

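Insight:

$$
\langle \vec{f} + v \vec{g}, \vec{x} \rangle = f(x) + v \cdot g(x)
$$

Aggregating opening proofs for several evaluations

Insight:

$$
\langle \vec{f}, \vec{x_1} + u \vec{x_2} \rangle = f(x_1) + u \cdot f(x_2)
$$

Double aggregation

Insight:

$$
\langle \vec{f} + v \vec{g}, \vec{x_1} + u \vec{x_2} \rangle = f(x_1) + v \cdot g(x_1) + u \cdot f(x_2) + uv \cdot g(x_2)
$$

Note that this kind of aggregation forces us to provide all combinations of evaluations, some of which might not be needed (for example, $g(x_2)$).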
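The double aggregation identity is a consequence of bilinearity of the inner product, and can be checked on small numbers. The sketch below uses an assumed toy field $\mathbb{F}_{101}$ and arbitrary polynomials and combiners; `powers(x, n)` is a hypothetical helper building the vector $(1, x, \dots, x^{n-1})$.

```python
P = 101                       # toy field, for illustration

def inner(u, v):
    return sum(a * b for a, b in zip(u, v)) % P

def evaluate(f, x):
    return sum(c * pow(x, i, P) for i, c in enumerate(f)) % P

def powers(x, n):
    return [pow(x, i, P) for i in range(n)]

f, g = [5, 0, 3, 7], [1, 8, 2, 6]
x1, x2 = 9, 20
u, v = 33, 57                 # random combiners, as a verifier would choose them

fg = [(a + v * b) % P for a, b in zip(f, g)]                              # f + v g
xs = [(p1 + u * p2) % P for p1, p2 in zip(powers(x1, 4), powers(x2, 4))]  # x1 + u x2
lhs = inner(fg, xs)
rhs = (evaluate(f, x1) + v * evaluate(g, x1)
       + u * evaluate(f, x2) + u * v * evaluate(g, x2)) % P
```

Note how `rhs` needs all four evaluations, including `g` at `x2`, even if only some of them are of interest.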

Splitting a polynomial

If a polynomial is too large to fit in one SRS, you can split it in chunks of size at most $n$.

Proof of correct commitment to a polynomial

That is useful in HALO. Problem statement: given a commitment $A$, and a polynomial $f$, prove to me that $A = \mathsf{com}(f)$. The proof is simple:

- generate a random point $s$

- evaluate $f$ at $s$, $f(s) = y$

- ask for an evaluation proof of $A$ on $s$. If it evaluates to $y$ as well then $A$ is a commitment to $f$ with overwhelming probability

Two Party Computation

This section introduces cryptographic primitives and optimizations of two-party computation protocols based on Garbled Circuit (GC) and Oblivious Transfer (OT).

More specifically, this section will cover the following contents.

-

Garbled Circuit

- Including the Free-XOR, Point-and-Permute, Row-Reduction and Half-Gate optimizations.

-

Oblivious Transfer

- Including base OT and OT extension. Note that we focus on maliciously secure OT protocols. The overhead is comparable to protocols with semi-honest security.

-

Two-Party Computation Protocol

- This is the well-known Yao’s 2PC protocol based on GC and OT.

Garbled Circuits

For general-purpose secure computation protocols, we often view functions as arithmetic circuits or boolean circuits. We consider boolean circuits because our applications involve securely computing ciphers (e.g., AES), which consist of a large number of boolean gates.

Boolean Circuits

Boolean circuits consist of $\mathsf{AND}$ gates ($a \wedge b$) and $\mathsf{NOT}$ gates ($\neg a$). These two gates are universal and we could represent any polynomial-size function with them. In 2PC protocols, we also use $\mathsf{XOR}$ gates ($a \oplus b$) for optimization.

According to the Free-XOR optimization (see the next subsection), the $\mathsf{XOR}$ gates and $\mathsf{NOT}$ gates are free, so one prefers to represent a function with more $\mathsf{XOR}$ and $\mathsf{NOT}$ gates and fewer $\mathsf{AND}$ gates.

For some commonly used functions (e.g., AES, SHA256), one usually uses hand-optimized circuits for efficiency, and stores them in files. The most popular circuit representation is the Bristol Fashion, which is also used in zkOracles.

The following Bristol fashion circuit is part of AES-128.

    36663 36919
    2 128 128
    1 128

    2 1 128 0 33254 XOR
    2 1 129 1 33255 XOR
    2 1 130 2 33256 XOR
    2 1 131 3 33257 XOR
    2 1 132 4 33258 XOR
    2 1 133 5 33259 XOR
    2 1 134 6 33260 XOR
    ...
    2 1 3542 3546 3535 AND
    2 1 3535 3459 3462 XOR
    2 1 3543 3541 3534 AND
    ...
    1 1 3452 3449 INV
    ...

The first line `36663 36919` means that the entire circuit has 36663 gates and 36919 wires.

The second line `2 128 128` means that the circuit has 2 inputs, and both inputs have 128 bits.

The third line `1 128` means that the circuit has 1 output, and the length is 128 bits.

The following lines are the gates in the circuit. For example

`2 1 128 0 33254 XOR` means that this gate has a fan-in of 2 and fan-out of 1. The first input wire is wire number 128, the second input wire is wire number 0, and the output wire is wire number 33254. The operation of this gate is $w_{33254} = w_{128} \oplus w_{0}$.

`2 1 3542 3546 3535 AND` means that this gate has a fan-in of 2 and fan-out of 1. The first input wire is 3542, the second input wire is 3546, and the output wire is 3535. The operation of this gate is $w_{3535} = w_{3542} \wedge w_{3546}$.

`1 1 3452 3449 INV` means that this gate has a fan-in of 1 and fan-out of 1. The input wire is 3452, and the output wire is 3449. The operation of this gate is $w_{3449} = \neg w_{3452}$.
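To make the gate format concrete, here is a small sketch of an evaluator for gate lines of this shape. It handles only the three gate types shown above, skips the header lines, and is run on a made-up three-gate circuit rather than on the AES file; `eval_bristol_gates` is a hypothetical helper name.

```python
def eval_bristol_gates(gate_lines, wires):
    """Evaluate Bristol-fashion gate lines in order, filling in the `wires` dict."""
    for line in gate_lines:
        parts = line.split()
        n_in = int(parts[0])                        # fan-in; fan-out is parts[1]
        ins = [wires[int(w)] for w in parts[2:2 + n_in]]
        out = int(parts[2 + n_in])                  # output wire number
        op = parts[-1]
        if op == "XOR":
            wires[out] = ins[0] ^ ins[1]
        elif op == "AND":
            wires[out] = ins[0] & ins[1]
        elif op == "INV":
            wires[out] = 1 - ins[0]
        else:
            raise ValueError(f"unsupported gate {op}")
    return wires

# A made-up circuit computing NOT((w0 XOR w1) AND w2).
gates = ["2 1 0 1 3 XOR", "2 1 3 2 4 AND", "1 1 4 5 INV"]
result = eval_bristol_gates(gates, {0: 1, 1: 0, 2: 1})
```

Gates are listed in an order where inputs are already available, so a single pass over the lines is enough in this sketch.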

Basics


Garbled circuits are a core building block of two-party computation. The invention of garbled circuits is credited to Andrew Yao, and thanks to many optimizations the state-of-the-art protocols are extremely efficient. This subsection will first introduce the basic idea of garbled circuits.

A garbled circuit involves two parties: the garbler and the evaluator. The garbler takes as input the circuit and the inputs, and generates the garbled circuit and encoded inputs. The evaluator takes as input the garbled circuit and encoded inputs, evaluates the garbled circuit and decodes the result into outputs.

The security of garbled circuit ensures that the evaluator only gets the outputs without any additional information.

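Given a circuit $C$ and related inputs $x$, the garbler handles the circuit as follows.

- For each gate in the circuit $C$, the garbler writes down the truth table of this gate. Take an $\mathsf{AND}$ gate with input wires $a, b$ and output wire $c$ for example. The truth table is as follows. Note that for different gates, the wires may be different.

| $a$ | $b$ | $c$ |
|---|---|---|
| $0$ | $0$ | $0$ |
| $0$ | $1$ | $0$ |
| $1$ | $0$ | $0$ |
| $1$ | $1$ | $1$ |

- The garbler chooses two uniformly random $\lambda$-bit strings for each wire. We call these strings the truth labels. For a wire $a$, label $X_a^0$ represents the value $0$ and label $X_a^1$ represents the value $1$. The garbler replaces the truth table with the following label table.

| $a$ | $b$ | $c$ |
|---|---|---|
| $X_a^0$ | $X_b^0$ | $X_c^0$ |
| $X_a^0$ | $X_b^1$ | $X_c^0$ |
| $X_a^1$ | $X_b^0$ | $X_c^0$ |
| $X_a^1$ | $X_b^1$ | $X_c^1$ |

- The garbler turns this label table into a garbled gate by encrypting the output labels using a symmetric double-key cipher $\mathsf{Enc}$ with the input labels as the keys. The garbler randomly permutes the ciphertexts to break the relation between the label and value.

| Garbled Gate |
|---|
| $\mathsf{Enc}_{X_a^0, X_b^0}(X_c^0)$ |
| $\mathsf{Enc}_{X_a^0, X_b^1}(X_c^0)$ |
| $\mathsf{Enc}_{X_a^1, X_b^0}(X_c^0)$ |
| $\mathsf{Enc}_{X_a^1, X_b^1}(X_c^1)$ |

- The garbled circuit $\mathsf{GC}$ consists of all garbled gates according to the circuit.

- Let $x$ be the input, where $x = (x_1, \dots, x_n) \in \{0,1\}^n$, and let $w_1, \dots, w_n$ be the wires of the input. The garbler sends $\mathsf{GC}$ and the encoded inputs $X_{w_1}^{x_1}, \dots, X_{w_n}^{x_n}$ to the evaluator. The garbler also reveals the relation of the output labels and the truth values. For example, for each output wire, the label is chosen by setting the least significant bit to be the truth value.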

Given the circuit $C$, the garbled circuit $\mathsf{GC}$ and the encoded inputs $X_{w_1}^{x_1}, \dots, X_{w_n}^{x_n}$, the evaluator does the following.

- Using the encoded inputs as keys, the evaluator goes through all the garbled gates one by one and tries to decrypt all the four ciphertexts according to the circuit.

- The encryption scheme is carefully designed to ensure that the evaluator can only decrypt one ciphertext to a valid message. For example, the label is encrypted together with a padding $0^\lambda$, and a decrypted message that contains $0^\lambda$ is considered to be valid.

- After the evaluator gets all the labels of the output wires, he/she extracts the output value. For example, just take the least significant bit of the label.

Note that the above description of garbled circuits is very inefficient; the following subsections will introduce optimizations to improve it.
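The basic scheme with trial decryption can be sketched for a single $\mathsf{AND}$ gate as follows. The double-key cipher here is a one-time pad derived from SHA-256 with an all-zero padding for validity, which is an assumed toy instantiation rather than the one used in practice, and `garble_and` and `evaluate` are hypothetical helper names.

```python
import hashlib
import secrets

LAMBDA = 16                       # label length in bytes (128 bits)
PAD = bytes(LAMBDA)               # all-zero padding marks a valid decryption

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def enc(k1, k2, msg):
    """Toy double-key cipher: SHA-256 of both keys used as a one-time pad."""
    pad = hashlib.sha256(k1 + k2).digest()          # 32 bytes cover label + padding
    return xor(pad, msg + PAD)

def dec(k1, k2, ct):
    pt = xor(hashlib.sha256(k1 + k2).digest(), ct)
    return pt[:LAMBDA] if pt[LAMBDA:] == PAD else None

def garble_and():
    # labels[w][v] is the label of wire w for truth value v
    labels = {w: (secrets.token_bytes(LAMBDA), secrets.token_bytes(LAMBDA)) for w in "abc"}
    table = [enc(labels["a"][i], labels["b"][j], labels["c"][i & j])
             for i in (0, 1) for j in (0, 1)]
    secrets.SystemRandom().shuffle(table)           # hide which row is which
    return labels, table

def evaluate(table, la, lb):
    """Trial-decrypt all four rows and keep the one with valid padding."""
    hits = [m for m in (dec(la, lb, ct) for ct in table) if m is not None]
    return hits[0]

labels, table = garble_and()
out = evaluate(table, labels["a"][1], labels["b"][1])
```

Each ciphertext is twice as long as a label and the evaluator attempts four decryptions, which is the overhead that point-and-permute removes.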

Point and Permute

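As described in the last subsection, the evaluator has to decrypt all four ciphertexts of every garbled gate and extract the valid label. This trial decryption results in 4 decryption operations per gate and requires ciphertext expansion.

The point-and-permute optimization of garbled circuits in BMR90 only needs 1 decryption and no ciphertext expansion. It works as follows.

- For the two random labels of each wire, the garbler assigns uniformly random color bits to them. For example, for the wire $a$, the garbler chooses uniformly random labels $X_a^0, X_a^1$, and then sets $\mathsf{lsb}(X_a^1) = \mathsf{lsb}(X_a^0) \oplus 1$, so that the two labels of a wire always carry opposite color bits. Note that the random color bits are independent of the truth bits.

- Then the garbled gate becomes the following. Suppose the relation of the labels and color bits is $(X_a^0, 1), (X_a^1, 0)$ and $(X_b^0, 0), (X_b^1, 1)$.

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(1, 0)$ | $\mathsf{Enc}_{X_a^0, X_b^0}(X_c^0)$ |
| $(1, 1)$ | $\mathsf{Enc}_{X_a^0, X_b^1}(X_c^0)$ |
| $(0, 0)$ | $\mathsf{Enc}_{X_a^1, X_b^0}(X_c^0)$ |
| $(0, 1)$ | $\mathsf{Enc}_{X_a^1, X_b^1}(X_c^1)$ |

- Reorder the 4 ciphertexts canonically by the color bits of the input labels as follows.

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(0, 0)$ | $\mathsf{Enc}_{X_a^1, X_b^0}(X_c^0)$ |
| $(0, 1)$ | $\mathsf{Enc}_{X_a^1, X_b^1}(X_c^1)$ |
| $(1, 0)$ | $\mathsf{Enc}_{X_a^0, X_b^0}(X_c^0)$ |
| $(1, 1)$ | $\mathsf{Enc}_{X_a^0, X_b^1}(X_c^0)$ |

- When the evaluator gets the input labels, say $(X_a^0, X_b^0)$, the evaluator first extracts the color bits $(1, 0)$ and decrypts the corresponding ciphertext (the third one in the above example) to get an output label.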

Encryption Instantiation

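The encryption algorithm is instantiated with a hash function $\mathsf{H}$ (modeled as a random oracle) and a one-time pad. The hash function could be a truncated $\mathsf{SHA256}$. The garbled gate is then as follows (continuing the example where $X_a^0, X_a^1$ have color bits $1, 0$ and $X_b^0, X_b^1$ have color bits $0, 1$).

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(0, 0)$ | $\mathsf{H}(X_a^1, X_b^0) \oplus X_c^0$ |
| $(0, 1)$ | $\mathsf{H}(X_a^1, X_b^1) \oplus X_c^1$ |
| $(1, 0)$ | $\mathsf{H}(X_a^0, X_b^0) \oplus X_c^0$ |
| $(1, 1)$ | $\mathsf{H}(X_a^0, X_b^1) \oplus X_c^0$ |

For security and efficiency reasons, one usually uses tweakable hash functions $\mathsf{H}(\cdot, \mathsf{tweak})$, where $\mathsf{tweak}$ is public and unique for different groups of calls to $\mathsf{H}$. E.g., $\mathsf{tweak}$ could be the gate identifier $\mathsf{gid}$. Then the garbled gate is as follows. Optimized instantiations of tweakable hash functions are given in the following subsections.

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(0, 0)$ | $\mathsf{H}(X_a^1, X_b^0, \mathsf{gid}) \oplus X_c^0$ |
| $(0, 1)$ | $\mathsf{H}(X_a^1, X_b^1, \mathsf{gid}) \oplus X_c^1$ |
| $(1, 0)$ | $\mathsf{H}(X_a^0, X_b^0, \mathsf{gid}) \oplus X_c^0$ |
| $(1, 1)$ | $\mathsf{H}(X_a^0, X_b^1, \mathsf{gid}) \oplus X_c^0$ |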
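A minimal sketch of point-and-permute combined with a tweakable hash is given below. It assumes that the color bit is the least significant bit of a label, that $\mathsf{H}$ is SHA-256 truncated to 128 bits, and that the tweak is an integer gate identifier; the helper names (`fresh_wire`, `garble_and`, `evaluate`) are hypothetical.

```python
import hashlib
import secrets

LAMBDA = 16  # label length in bytes (assumption: 128-bit labels)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def H(k1: bytes, k2: bytes, gid: int) -> bytes:
    # Truncated SHA-256 with the gate identifier as a public tweak.
    return hashlib.sha256(k1 + k2 + gid.to_bytes(8, "big")).digest()[:LAMBDA]


def color(label: bytes) -> int:
    # Assumption: the color bit is the least significant bit of the label.
    return label[-1] & 1


def fresh_wire():
    # Two random labels with opposite color bits; index = truth value.
    l0 = secrets.token_bytes(LAMBDA)
    l1 = bytearray(secrets.token_bytes(LAMBDA))
    l1[-1] = (l1[-1] & 0xFE) | (color(l0) ^ 1)
    return [l0, bytes(l1)]


def garble_and(gid: int):
    a, b, c = fresh_wire(), fresh_wire(), fresh_wire()
    table = [None] * 4
    for x in (0, 1):
        for y in (0, 1):
            # The row position is determined by the color bits, not the truth values.
            row = 2 * color(a[x]) + color(b[y])
            table[row] = xor(H(a[x], b[y], gid), c[x & y])
    return (a, b, c), table


def evaluate(table, label_a: bytes, label_b: bytes, gid: int) -> bytes:
    # One hash call and one lookup: no trial decryption, no padding.
    row = 2 * color(label_a) + color(label_b)
    return xor(H(label_a, label_b, gid), table[row])
```

Compared with the basic scheme, each ciphertext now has the same length as a label, and the evaluator performs a single decryption per gate.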

Free XOR

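The Free-XOR optimization significantly improves the efficiency of garbled circuits. The garbler and evaluator can handle $\mathsf{XOR}$ gates for free! The method is given in KS08.

- The garbler chooses a global uniformly random string $\Delta$ with $\mathsf{lsb}(\Delta) = 1$.

- For any $\mathsf{XOR}$ gate with input wires $a, b$ and output wire $c$, the garbler chooses uniformly random labels $X_a$ and $X_b$.

- Let $X_a$ and $X_a \oplus \Delta$ denote the 0 label and 1 label for input wire $a$, respectively. Similarly for wire $b$.

- Let $X_c = X_a \oplus X_b$ and $X_c \oplus \Delta$ denote the 0 label and 1 label for output wire $c$, respectively.

- The garbler does not need to send a garbled gate for each $\mathsf{XOR}$ gate. The evaluator locally $\mathsf{XOR}$s the input labels of the gate to get the output label. This is correct because, given an $\mathsf{XOR}$ gate, the label table is as follows.

| $a$ | $b$ | $c$ |
|---|---|---|
| $X_a$ | $X_b$ | $X_a \oplus X_b$ |
| $X_a$ | $X_b \oplus \Delta$ | $X_a \oplus X_b \oplus \Delta$ |
| $X_a \oplus \Delta$ | $X_b$ | $X_a \oplus X_b \oplus \Delta$ |
| $X_a \oplus \Delta$ | $X_b \oplus \Delta$ | $X_a \oplus X_b$ |

Here are some remarks on the Free-XOR optimization.

- Free XOR is compatible with the point-and-permute optimization because $\mathsf{lsb}(\Delta) = 1$, so the two labels of every wire have opposite color bits.

- The garbler should keep $\Delta$ private at all times for security reasons.

- Garbling an $\mathsf{AND}$ gate is the same as before, except that all the labels are of the form $(X, X \oplus \Delta)$ for uniformly random $X$.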
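The Free-XOR relation can be checked with a few lines of code. The sketch below assumes 128-bit labels and takes the color bit to be the least significant bit; the variable and function names are hypothetical.

```python
import secrets

LAMBDA = 16  # label length in bytes (assumption: 128-bit labels)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def random_delta() -> bytes:
    d = bytearray(secrets.token_bytes(LAMBDA))
    d[-1] |= 1  # force lsb(delta) = 1
    return bytes(d)


delta = random_delta()  # global secret, known only to the garbler


def fresh_wire():
    # (0 label, 1 label) = (X, X xor delta)
    x0 = secrets.token_bytes(LAMBDA)
    return (x0, xor(x0, delta))


# Garbler side: the output labels of an XOR gate need no ciphertexts.
a = fresh_wire()
b = fresh_wire()
c0 = xor(a[0], b[0])
c = (c0, xor(c0, delta))


def eval_xor(label_a: bytes, label_b: bytes) -> bytes:
    # Evaluator side: XOR the two input labels locally.
    return xor(label_a, label_b)
```

Because $\mathsf{lsb}(\Delta) = 1$, the two labels of every wire in this sketch automatically differ in their last bit, which is what point-and-permute needs.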

Row Reduction

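With the Free-XOR optimization, the bottleneck of garbled circuits is handling $\mathsf{AND}$ gates. Row reduction aims to reduce the number of ciphertexts of each garbled gate. More specifically, it reduces $4$ ciphertexts to $3$. The optimization is given in the NPS99 paper.

To be compatible with the Free-XOR optimization, the garbled gates are of the following form (still using the example in the point-and-permute optimization, where the 0 labels $X_a$ and $X_b$ have color bits $1$ and $0$, respectively).

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(0, 0)$ | $\mathsf{H}(X_a \oplus \Delta, X_b, \mathsf{gid}) \oplus X_c$ |
| $(0, 1)$ | $\mathsf{H}(X_a \oplus \Delta, X_b \oplus \Delta, \mathsf{gid}) \oplus X_c \oplus \Delta$ |
| $(1, 0)$ | $\mathsf{H}(X_a, X_b, \mathsf{gid}) \oplus X_c$ |
| $(1, 1)$ | $\mathsf{H}(X_a, X_b \oplus \Delta, \mathsf{gid}) \oplus X_c$ |

Since $\mathsf{H}$ is modeled as a random oracle, one could set the first row of the above garbled gate to $0$, and then remove that row from the garbled gate. This means that we choose $X_c = \mathsf{H}(X_a \oplus \Delta, X_b, \mathsf{gid})$. Therefore, the garbled gate is changed as follows.

| Color Bits $(a, b)$ | Garbled Gate |
|---|---|
| $(0, 1)$ | $\mathsf{H}(X_a \oplus \Delta, X_b \oplus \Delta, \mathsf{gid}) \oplus X_c \oplus \Delta$ |
| $(1, 0)$ | $\mathsf{H}(X_a, X_b, \mathsf{gid}) \oplus X_c$ |
| $(1, 1)$ | $\mathsf{H}(X_a, X_b \oplus \Delta, \mathsf{gid}) \oplus X_c$ |

The evaluator handles garbled gates as before, except that he/she directly computes the hash function to obtain the output label if the color bits are $(0, 0)$.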

Half Gate

The Half-Gate optimization further reduces the number of ciphertexts from $3$ to $2$. This is also the protocol used in zkOracles, and more details of this algorithm will be given in this subsection. The algorithm is presented in the ZRE15 paper.

We first describe the basic idea behind half-gate garbling. Let's say we want to compute the gate $c = a \wedge b$. With the Free-XOR optimization, let $(X_a, X_a \oplus \Delta)$ and $(X_b, X_b \oplus \Delta)$ denote the input wire labels to this gate, and $(X_c, X_c \oplus \Delta)$ denote the output wire labels, with $X_a, X_b, X_c$ each encoding the value $0$.

Half-gate garbling covers two cases: when the garbler knows one of the inputs, and when the evaluator knows one of the inputs.

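Garbler half-gate. Consider the case of an $\mathsf{AND}$ gate $c = a \wedge b$, where $a, b$ are intermediate wires in the circuit and the garbler somehow knows in advance what the value $a$ will be.

When $a = 0$, the garbler will garble a unary gate that always outputs $0$. The label table is as follows.

| $b$ | $c$ |
|---|---|
| $X_b$ | $X_c$ |
| $X_b \oplus \Delta$ | $X_c$ |

Then the garbler generates two ciphertexts:

$$\mathsf{H}(X_b) \oplus X_c$$

$$\mathsf{H}(X_b \oplus \Delta) \oplus X_c$$

When $a = 1$, the garbler will garble a unary identity gate. The label table is as follows.

| $b$ | $c$ |
|---|---|
| $X_b$ | $X_c$ |
| $X_b \oplus \Delta$ | $X_c \oplus \Delta$ |

Then the garbler generates two ciphertexts:

$$\mathsf{H}(X_b) \oplus X_c$$

$$\mathsf{H}(X_b \oplus \Delta) \oplus X_c \oplus \Delta$$

Since $a$ is known to the garbler, the two cases shown above can be unified as follows:

$$\mathsf{H}(X_b) \oplus X_c$$

$$\mathsf{H}(X_b \oplus \Delta) \oplus X_c \oplus a \cdot \Delta$$

These two ciphertexts are then suitably permuted according to the color bits of $X_b$. The evaluator takes a hash of its wire label for $b$ and decrypts the appropriate ciphertext. If $a = 0$, he/she obtains the output wire label $X_c$ for both values of $b$. If $a = 1$, the evaluator obtains either $X_c$ or $X_c \oplus \Delta$, depending on the bit $b$. Intuitively, the evaluator will never know both $X_b$ and $X_b \oplus \Delta$, hence the other ciphertext appears completely random.

By applying the row-reduction optimization, we reduce the number of ciphertexts from $2$ to $1$ as follows. The garbler chooses $X_c$ so that the ciphertext whose input label has color bit $0$ is the all-zero string, and sends only the remaining ciphertext:

$$\mathsf{H}(X_b) \oplus \mathsf{H}(X_b \oplus \Delta) \oplus a \cdot \Delta$$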

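Evaluator half-gate. Consider the case of an $\mathsf{AND}$ gate $c = a \wedge b$, where $a, b$ are intermediate wires in the circuit and the evaluator somehow knows the value $a$ at the time of evaluation. The evaluator can behave differently based on the value of $a$.

When $a = 0$, the evaluator should always obtain the output wire label $X_c$, so the garbled circuit should contain the ciphertext:

$$\mathsf{H}(X_a) \oplus X_c$$

When $a = 1$, it is enough for the evaluator to obtain $X_c \oplus X_b$. He/she can then $\mathsf{XOR}$ it with the other wire label (either $X_b$ or $X_b \oplus \Delta$) to obtain either $X_c$ or $X_c \oplus \Delta$. Hence the garbler should provide the ciphertext:

$$\mathsf{H}(X_a \oplus \Delta) \oplus X_c \oplus X_b$$

Combining the above two cases, the garbler should provide two ciphertexts:

$$\mathsf{H}(X_a) \oplus X_c$$

$$\mathsf{H}(X_a \oplus \Delta) \oplus X_c \oplus X_b$$

Note that these two ciphertexts do NOT have to be permuted according to the color bit of $X_a$, because the evaluator already knows $a$. If $a = 0$, the evaluator uses the wire label $X_a$ to decrypt the first ciphertext. If $a = 1$, the evaluator uses the wire label $X_a \oplus \Delta$ to decrypt the second ciphertext and $\mathsf{XOR}$s the result with the wire label for $b$.

By applying the row-reduction optimization, we reduce the number of ciphertexts from $2$ to $1$ as follows. The garbler sets $X_c = \mathsf{H}(X_a)$, so the first ciphertext is the all-zero string and only the second one is sent:

$$\mathsf{H}(X_a) \oplus \mathsf{H}(X_a \oplus \Delta) \oplus X_b$$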

Two halves make a whole. Consider the case of garbling an $\mathsf{AND}$ gate $c = a \wedge b$, where both inputs are secret. Consider:

$$c = a \wedge b = a \wedge (r \oplus r \oplus b) = (a \wedge r) \oplus (a \wedge (r \oplus b))$$

Suppose the garbler chooses a uniformly random bit $r$. In this case, the first $\mathsf{AND}$ gate $(a \wedge r)$ can be garbled with a garbler-half-gate. If we further arrange for the evaluator to learn the value $r \oplus b$, then the second $\mathsf{AND}$ gate $(a \wedge (r \oplus b))$ can be garbled with an evaluator-half-gate. Leaking this extra bit $r \oplus b$ to the evaluator is safe, as it carries no information about the sensitive value $b$. The remaining $\mathsf{XOR}$ gate is free and the total cost is $2$ ciphertexts.

Actually the evaluator can learn $r \oplus b$ without any overhead. The garbler chooses $r$ to be the color bit of the 0 label on wire $b$. Since that color bit is chosen uniformly, it is secure to use it. Then, when a particular value $b$ is on that wire, the evaluator will hold a wire label whose color bit is $r \oplus b$.

Let $(X_b, X_b \oplus \Delta)$ be the labels for $b$, and let $r = \mathsf{lsb}(X_b)$. $r$ is the color bit of the 0 label on the wire, and it is a secret known only to the garbler. When the evaluator holds a wire label for $b$ whose color bit is $s$, the label is $X_b \oplus (s \oplus r) \cdot \Delta$, corresponding to truth value $s \oplus r$.

Full Description

Garbling. The garbling algorithm is described as follows.

-

Let , be the set of input and output wires of , respectively. Given a non-input wire , let denote the two input wires of associated to an or gate. Let denote the input wire of associated to an gate. The garbler maintains three sets , and . The garbler also maintains a initiated as .

-

Choose a secret global 128-bit string uniformly, and set . Let be the label of public bit , where is chosen uniformly at random. Note that will be sent to the evaluator.

-

For , do the following.

- Choose -bit uniformly at random, and set .

- Let and insert it to .

-

For any non-input wire , do the following.

- If the gate associated to is a gate.

- Let .

- Compute and .

- If the gate associated to is an gate.

- Let ,

- Compute and .

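- If the gate associated to $i$ is an $\mathsf{AND}$ gate (below, $X_w^0$ and $X_w^1 = X_w^0 \oplus \Delta$ denote the 0 label and 1 label of wire $w$, and $\mathsf{ctr}$ is the garbler's counter).

- Let $(a, b)$ be the two input wires of $i$.

- Let $p_a = \mathsf{lsb}(X_a^0)$, $p_b = \mathsf{lsb}(X_b^0)$.

- Compute the first half gate:

- Let $T_G = \mathsf{H}(X_a^0, \mathsf{ctr}) \oplus \mathsf{H}(X_a^1, \mathsf{ctr}) \oplus p_b \cdot \Delta$.

- Let $X_G^0 = \mathsf{H}(X_a^0, \mathsf{ctr}) \oplus p_a \cdot T_G$.

- $\mathsf{ctr} = \mathsf{ctr} + 1$.

- Compute the second half gate:

- Let $T_E = \mathsf{H}(X_b^0, \mathsf{ctr}) \oplus \mathsf{H}(X_b^1, \mathsf{ctr}) \oplus X_a^0$.

- Let $X_E^0 = \mathsf{H}(X_b^0, \mathsf{ctr}) \oplus p_b \cdot (T_E \oplus X_a^0)$.

- Let $X_i^0 = X_G^0 \oplus X_E^0$ and $X_i^1 = X_i^0 \oplus \Delta$, and insert the garbled gate $(T_G, T_E)$ into the garbled circuit.

- $\mathsf{ctr} = \mathsf{ctr} + 1$.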

- If the gate associated to is a gate.

-

For , do the following.

- Compute .

- Insert into .

-

The garbler outputs .

Input Encoding. Given , the garbler encodes the input as follows.

- For all , compute

- Outputs .

Evaluating. The evaluating algorithm is described as follows.

-

The evaluator takes as input the garbled circuit and the encoded inputs .

-

The evaluator obtains the input labels from and initiates .

-

For any non-input wire , do the following.

- If the gate associated to is a gate.

- Let .

- Compute .

- If the gate associated to is an gate.

- Let ,

- Compute .

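- If the gate associated to $i$ is an $\mathsf{AND}$ gate (below, $X_w$ denotes the label that the evaluator holds for wire $w$, and $\mathsf{ctr}$ is the evaluator's counter).

- Let $(a, b)$ be the two input wires of $i$.

- Let $s_a = \mathsf{lsb}(X_a)$, $s_b = \mathsf{lsb}(X_b)$.

- Parse the garbled gate of $i$ as $(T_G, T_E)$.

- Compute $X_G = \mathsf{H}(X_a, \mathsf{ctr}) \oplus s_a \cdot T_G$.

- $\mathsf{ctr} = \mathsf{ctr} + 1$.

- Compute $X_E = \mathsf{H}(X_b, \mathsf{ctr}) \oplus s_b \cdot (T_E \oplus X_a)$.

- Let $X_i = X_G \oplus X_E$, and set $\mathsf{ctr} = \mathsf{ctr} + 1$ so that the counter stays in sync with the garbler.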

- If the gate associated to is a gate.

-

For , do the following.

- Let .

- Outputs , the set of .

Output Decoding. Given and , the evaluator decodes the labels into outputs as follows.

- For any , compute .

- Outputs all .
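The $\mathsf{AND}$-gate part of the garbling, evaluating and decoding algorithms can be sketched as follows. This is a sketch in the style of the half-gates construction of ZRE15, not the zkOracles implementation: it assumes 128-bit labels, uses SHA-256 truncated to 128 bits as a stand-in for the tweakable hash $\mathsf{H}$, takes the tweak to be an integer counter, and all function names are hypothetical.

```python
import hashlib
import secrets

LAMBDA = 16  # label length in bytes (assumption: 128-bit labels)


def xor(*parts: bytes) -> bytes:
    out = bytes(LAMBDA)
    for p in parts:
        out = bytes(x ^ y for x, y in zip(out, p))
    return out


def lsb(label: bytes) -> int:
    return label[-1] & 1


def mul(bit: int, block: bytes) -> bytes:
    # bit * block, where bit is 0 or 1
    return block if bit else bytes(LAMBDA)


def H(label: bytes, tweak: int) -> bytes:
    # Stand-in tweakable hash: truncated SHA-256 (assumption, not fixed-key AES).
    return hashlib.sha256(label + tweak.to_bytes(8, "big")).digest()[:LAMBDA]


def random_delta() -> bytes:
    d = bytearray(secrets.token_bytes(LAMBDA))
    d[-1] |= 1  # lsb(delta) = 1
    return bytes(d)


def garble_and(a0: bytes, b0: bytes, delta: bytes, ctr: int):
    """Return the 0 label of the output wire and the two ciphertexts (T_G, T_E)."""
    a1, b1 = xor(a0, delta), xor(b0, delta)
    pa, pb = lsb(a0), lsb(b0)
    # First half gate (garbler half-gate), tweak ctr.
    t_g = xor(H(a0, ctr), H(a1, ctr), mul(pb, delta))
    g0 = xor(H(a0, ctr), mul(pa, t_g))
    # Second half gate (evaluator half-gate), tweak ctr + 1.
    t_e = xor(H(b0, ctr + 1), H(b1, ctr + 1), a0)
    e0 = xor(H(b0, ctr + 1), mul(pb, xor(t_e, a0)))
    return xor(g0, e0), (t_g, t_e)


def eval_and(label_a: bytes, label_b: bytes, gate, ctr: int) -> bytes:
    t_g, t_e = gate
    sa, sb = lsb(label_a), lsb(label_b)
    g = xor(H(label_a, ctr), mul(sa, t_g))
    e = xor(H(label_b, ctr + 1), mul(sb, xor(t_e, label_a)))
    return xor(g, e)


def decode(d: int, label: bytes) -> int:
    # d is the decoding bit lsb(0 label) published by the garbler.
    return d ^ lsb(label)
```

A gate consumes two tweaks here, so a caller garbling several $\mathsf{AND}$ gates would advance the counter by two after each gate on both the garbler and the evaluator side.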

Fixed-Key-AES Hashes

Using fixed-key AES as a hash function in this context can be traced back to the work of Bellare et al. BHKR13, who considered fixed-key AES for circuit garbling. Prior to that point, most implementations of garbled circuits used a hash function such as $\mathsf{SHA256}$, modelled as a random oracle. But Bellare et al. showed that using fixed-key AES can be up to $50\times$ faster than using a cryptographic hash function due to hardware support for AES provided by modern processors.

Prior to BHKR13, CPU time was the main bottleneck for protocols based on circuit garbling; after the introduction of fixed-key cipher garbling, network throughput became the dominant factor. For this reason, fixed-key AES has been almost universally adopted in subsequent implementations of garbled circuits.

Several instantiations of hash functions based on fixed-key AES have been proposed, inspired by the work of Bellare et al. However, most of them have security flaws, as pointed out by GKWY20. GKWY20 also proposes a provably secure instantiation that satisfies a property called Tweakable Circular Correlation Robustness (TCCR). More discussion of the concrete security of hashes based on fixed-key AES can be found in GKWWY20.

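The TCCR hash based on fixed-key AES is defined as follows.

$$\mathsf{H}(x, i) = \pi(\pi(x) \oplus i) \oplus \pi(x)$$

where $x$ is a $128$-bit string, $i$ a public $128$-bit $\mathsf{tweak}$, and $\pi(x) = \mathsf{AES}(k, x)$ for any fixed key $k$.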
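The structure of this construction, two calls to a fixed permutation combined with $\mathsf{XOR}$s, can be sketched as below. The permutation is passed in as a parameter; `toy_permutation` is a hypothetical stand-in that is NOT AES and offers no security, and it is included only so that the sketch runs with the standard library. In practice $\pi$ would be AES-128 under a fixed key, provided by a cryptographic library.

```python
from typing import Callable

MASK = (1 << 128) - 1  # work with 128-bit blocks represented as integers


def tccr(pi: Callable[[int], int], x: int, tweak: int) -> int:
    """Compute pi(pi(x) XOR tweak) XOR pi(x) on 128-bit integers."""
    y = pi(x & MASK)
    return pi(y ^ (tweak & MASK)) ^ y


def toy_permutation(x: int) -> int:
    # Stand-in permutation (assumption for illustration only, NOT AES):
    # multiplying by an odd constant and adding 1 is a bijection modulo 2^128.
    return (x * 0x9E3779B97F4A7C15F39CC0605CEDC835 + 1) & MASK
```

Note that $\pi(x)$ is computed once and reused, so one hash evaluation costs two permutation calls.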

Oblivious Transfer

Oblivious transfer (OT) is an essential tool in cryptography with a wide range of applications in secure multi-party computation. The OT protocol has different variants such as $1$-out-of-$2$, $1$-out-of-$n$ and $k$-out-of-$n$. Here we only focus on $1$-out-of-$2$.

An OT protocol involves two parties: the sender and the receiver. The sender has $2$ strings, whereas the receiver has a choice bit. After the execution of the OT protocol, the receiver obtains one of the strings according to the choice bit, but learns nothing about the other string. The sender learns nothing about the choice bit.

                      Sender            Receiver
                      +----------+
    (x_0,x_1) ------->|          |<------ b
                      | OT Prot. |
                      |          |-------> x_b
                      +----------+

Due to a result of Impagliazzo and Rudich in this paper, it is very unlikely that OT is possible without the use of public-key cryptography. However, OT can be efficiently extended. That is, starting with a small number of base OTs, one can create many more OTs using only symmetric primitives.

The seminal work of IKNP03 presented a very efficient protocol for extending OTs, requiring only black-box use of symmetric primitives and $\kappa$ base OTs, where $\kappa$ is the security parameter. This doc focuses on the family of protocols inspired by IKNP.

Base OT

We introduce the CO15 OT as the base OT.